Computer Fundamentals - The Great Coordination of Electricity

The invention of the computer is the next step change in the progress of our society and now forms an exponential layer on top of electricity that helps power everything in our world.

The ancient Mesopotamians gave us the first written symbols on clay tablets nearly 5,000 years ago. Eventually these symbols evolved to represent the sounds of spoken language, allowing more complex ideas to be formed by combining symbols into words. Hundreds of written and spoken languages have come since, and writing has moved from clay tablets to early forms of paper, and eventually to typewriters and other mechanical apparatuses. (Post image: photo by Adi Goldstein on Unsplash.)

Today, our entire world runs on top of the data and information that is represented by pulses of electric current and stored electrons organized neatly among complex abstraction layers.

We already live in a metaverse: an interconnected world of machines that improve exponentially over time. We humans, through decades of research, development, and ever-increasing abstraction, have created a way to program and manipulate the flow of electrons to represent the physical world through space and time. With just a few keystrokes, we can channel energy into electricity and then into the fine-tuned detail of a computer program.

The exponential increase in world GDP correlates with the invention and rise in electricity production and the invention and exponential growth in computing. Although difficult to quantify, the digital economy is now ~15% of world GDP and has grown at a rate of 2.5x the rest of the economy.

This article serves as an overview of computing basics and a foundation for future analysis, prediction, and understanding of the economics, valuations, and trends within the industry. In this article we review the:

- Basics of binary data and computing

- History of computing (and contextual insight)

- Exponential indicators of past, present, and future computing

Part I - Binary Data

Computers perform calculations in a fetch, decode, execute cycle, which today occurs billions of times per second. The language that all computers understand is binary: every piece of information is represented digitally as 1’s and 0’s. A lightbulb being on or off in a circuit is one of the easiest ways to understand the boolean ‘On/Off’ or ‘True/False’ nature of binary data. Early computers, with limited circuitry, memory, and compute, combined circuits (outlined below) to process 8 bits at a time (a bit represents a 1 or a 0 and is the smallest representable piece of information).

Morse code is a great example of early binary data. Each English character is represented by a series of dots and dashes - or 1’s and 0’s.

With clever abstractions, all numbers, text, images, video, and sound can be turned into (often massive) series of 1’s and 0’s. This video here from Code.org explains all things binary and for example how the Instagram photo on your phone can be represented in binary.

And of course, the incredible folks at Crash Course (referenced *many* times throughout this post) have a great video for how to represent various file formats in binary (txt, wav, bitmap).
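To make the idea concrete, here is a minimal Python sketch of turning text and a single pixel into 1's and 0's. The function names are illustrative, and the choice of UTF-8 for text and 8 bits per color channel for the pixel are assumptions made for the example, not the only possible encodings.

```python
def text_to_bits(text: str) -> str:
    """Encode text as UTF-8 and render each byte as 8 binary digits."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def bits_to_text(bits: str) -> str:
    """Reverse text_to_bits: parse each 8-bit group back into a byte."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


def pixel_to_bits(red: int, green: int, blue: int) -> str:
    """One 24-bit RGB pixel: 8 bits for each color channel (0-255)."""
    return f"{red:08b}{green:08b}{blue:08b}"


if __name__ == "__main__":
    encoded = text_to_bits("Hi")
    print(encoded)                # 01001000 01101001
    print(bits_to_text(encoded))  # Hi
    print(pixel_to_bits(255, 128, 0))
```

A photo is the same idea repeated: millions of pixels, each a short run of bits, stored one after another.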

Part II - Computer Basics

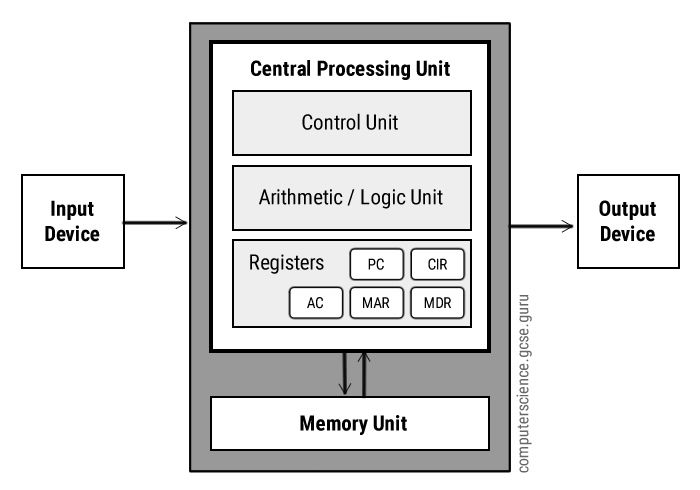

Armed with an intuition of how all information can be represented as 1’s and 0’s, we can look at the main parts of a computer in closer detail. The information presented below describes the von Neumann architecture that is used in most modern computers today. Much of it is a summary of the *incredible* video series from Crash Course on the various components and parts of a computer, so that we can in turn contextualize and analyze the exponential progress associated with each piece of the computing pipeline.

Logic Gates

At the most basic level, everything in a computer eventually ends up as a 1 or 0. The way a computer fetches, decodes, and executes data depends entirely on series of 1’s and 0’s flowing through circuits. The most fundamental layer of a computer is a logic gate. By arranging a circuit with a gate (or multiple gates), a 1 or 0 can be represented in a calculation. An OR Gate or an AND Gate can be seen in the diagram here:

With some additional engineering and adding different types of circuits, a Gated Latch can be used to persist a bit (a 1 or a 0), which maintains the state of electric current that can be later accessed to know if a bit is a 1 or 0 (computer memory).
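As a rough illustration of how a latch "remembers" a bit, the sketch below simulates a gated latch in Python using only AND, OR, and NOT functions. The wiring shown (set and reset signals feeding an AND-OR latch) is one common textbook arrangement and is an assumption here; real hardware settles electrically instead of running a function call, and the class and method names are hypothetical.

```python
def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def NOT(a: int) -> int:
    return 1 - a


class GatedLatch:
    """Stores one bit; the stored bit only changes while write_enable is 1."""

    def __init__(self) -> None:
        self.stored = 0

    def update(self, data: int, write_enable: int) -> int:
        set_signal = AND(data, write_enable)
        reset_signal = AND(NOT(data), write_enable)
        # The previous output is fed back in, which is what holds the state.
        self.stored = AND(OR(set_signal, self.stored), NOT(reset_signal))
        return self.stored


if __name__ == "__main__":
    latch = GatedLatch()
    print(latch.update(data=1, write_enable=1))  # 1 (bit is written)
    print(latch.update(data=0, write_enable=0))  # 1 (write disabled, bit is kept)
    print(latch.update(data=0, write_enable=1))  # 0 (bit is overwritten)
```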

In all of these cases, the ‘input’ or charge that triggers the gate to open or close comes from some sort of programmatic intervention. In the early days of computing, this could have been the manual flipping of switches on a machine. This input then became punch cards or another form of input (such as a keyboard). And now we have programs that are pre-installed or downloaded and executed on a computer to provide the electrical pulses that ‘open or close’ the gates.

The most basic gates, when combined, can form things such as addition units or subtraction units (such as an 8 bit adder). This abstraction continues to form advanced circuits capable of advanced computation (multiplication, division, etc).
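The step from single gates to an 8-bit adder can be sketched in a few lines of Python. This is a minimal ripple-carry design built from a half adder and a full adder; the function names are illustrative, and XOR is treated as a primitive gate for brevity even though it can itself be built from AND, OR, and NOT.

```python
def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def XOR(a: int, b: int) -> int:
    return a ^ b


def half_adder(a: int, b: int) -> tuple:
    """Add two bits. Returns (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add two bits plus an incoming carry. Returns (sum_bit, carry_out)."""
    partial_sum, carry_1 = half_adder(a, b)
    sum_bit, carry_2 = half_adder(partial_sum, carry_in)
    return sum_bit, OR(carry_1, carry_2)


def add_8bit(x: int, y: int) -> tuple:
    """Chain eight full adders, lowest bit first. Returns (result, carry_out)."""
    carry = 0
    result = 0
    for position in range(8):
        bit_x = (x >> position) & 1
        bit_y = (y >> position) & 1
        sum_bit, carry = full_adder(bit_x, bit_y, carry)
        result |= sum_bit << position
    return result, carry


if __name__ == "__main__":
    print(add_8bit(100, 55))   # (155, 0)
    print(add_8bit(200, 100))  # (44, 1): 300 does not fit in 8 bits
```

The final carry bit is how the circuit signals that the true answer was too large for 8 bits.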

Early logic gates were made with electro-mechanical parts, which later evolved to vacuum tubes, and eventually transistors.

Arithmetic Logic Unit (ALU)

With enough abstraction and combination of various logic gates, an Arithmetic Logic Unit (ALU) is created. An ALU is an abstraction over logic gates that can take two input integers, an operation code (such as add or subtract), and create an output.
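In software terms, an ALU behaves like a function of two integers and an operation code. The sketch below is a simplified 8-bit model; the opcode values, the flag names, and the choice of four operations are assumptions for illustration and do not correspond to any particular chip.

```python
from enum import Enum
from typing import Dict, Tuple


class Op(Enum):
    """Hypothetical operation codes for this example."""
    ADD = 0b00
    SUB = 0b01
    AND = 0b10
    OR = 0b11


def alu(op: Op, a: int, b: int, width: int = 8) -> Tuple[int, Dict[str, bool]]:
    """Apply op to a and b, keep only `width` bits, and report status flags."""
    mask = (1 << width) - 1
    if op is Op.ADD:
        raw = a + b
    elif op is Op.SUB:
        raw = a - b
    elif op is Op.AND:
        raw = a & b
    elif op is Op.OR:
        raw = a | b
    else:
        raise ValueError(f"unknown operation: {op}")
    result = raw & mask
    flags = {
        "zero": result == 0,           # the output is all 0 bits
        "out_of_range": raw != result  # the true answer did not fit in `width` bits
    }
    return result, flags


if __name__ == "__main__":
    print(alu(Op.ADD, 100, 55))
    print(alu(Op.SUB, 5, 5))
    print(alu(Op.ADD, 200, 100))
```

Flags like these are what let the rest of the CPU make decisions (for example, "jump if the last result was zero").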

Registers, Multiplexers, and Memory (RAM)

A register is another abstraction, this time on gated latches. A group of gated latches is combined to hold a single multi-bit number. Latches can also be arranged in a matrix, managed by a multiplexer. In this way, each gated latch (which stores an electrical charge representing a 1 or a 0) is given an address - a row and a column number. These groups of latches are then combined into memory units, and those memory units are in turn grouped and abstracted into an entire memory module, known as Random Access Memory (RAM).
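The row-and-column addressing idea can be sketched as follows. This toy model assumes a 16 x 16 grid addressed by an 8-bit number, with the upper 4 bits selecting the row and the lower 4 bits selecting the column; each cell here holds a whole byte as a stand-in for eight 1-bit latch matrices working in parallel. The class name and sizes are hypothetical.

```python
class LatchMatrixMemory:
    """256 addressable bytes laid out as a 16 x 16 grid."""

    ROWS = 16
    COLS = 16

    def __init__(self) -> None:
        self.cells = [[0] * self.COLS for _ in range(self.ROWS)]

    def decode(self, address: int) -> tuple:
        """Split an 8-bit address into (row, column), as a multiplexer would."""
        if not 0 <= address < self.ROWS * self.COLS:
            raise ValueError("address must fit in 8 bits")
        return address >> 4, address & 0x0F

    def write(self, address: int, value: int) -> None:
        if not 0 <= value <= 0xFF:
            raise ValueError("value must fit in 8 bits")
        row, col = self.decode(address)
        self.cells[row][col] = value

    def read(self, address: int) -> int:
        row, col = self.decode(address)
        return self.cells[row][col]


if __name__ == "__main__":
    ram = LatchMatrixMemory()
    ram.write(0b10100011, 42)
    print(ram.decode(0b10100011))  # (10, 3)
    print(ram.read(0b10100011))    # 42
```

Because any address decodes straight to a row and column, every location takes the same time to reach, which is the "random access" in RAM.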

Central Processing Unit (CPU)

Moving up the abstraction layers, we can now add together the various gates, registers, and memory units (which again are abstractions on top of logic gate circuits) to form an entire Central Processing Unit (CPU) which will orchestrate all operations of a computer. The CPU ‘talks’ between various registers and memory, and leverages the ALU for computation.

The Control Unit of the CPU has two registers - the instruction register and the instruction address register (also known as the program counter, or PC) - that maintain the state of the current instruction that is being performed. The control unit serves as the orchestrator and keeps state as a program is run in a computer.

Also within the CPU is the Clock, which is an electrical component that generates pulses of electric current on a regular interval. Each clock cycle represents a new operation. Computers perform actions in an instruction cycle:

(All of the below is from Wikipedia)

Fetch: The next instruction is fetched from the memory address that is currently stored in the program counter and stored into the instruction register. At the end of the fetch operation, the PC points to the next instruction that will be read at the next cycle.

Decode: During this stage, the encoded instruction presented in the instruction register is interpreted by the decoder. Any required data is fetched from main memory to be processed and then placed into data registers.

Execute: The control unit of the CPU passes the decoded information as a sequence of control signals to the relevant functional units of the CPU to perform the actions required by the instruction, such as reading values from registers, passing them to the ALU to perform mathematical or logic functions on them, and writing the result back to a register.
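The three stages above can be seen working together in a toy simulator. The sketch below assumes a made-up machine with 16 bytes of memory, two registers, and 8-bit instructions whose upper 4 bits are the opcode and lower 4 bits are a memory address; the opcode values and names are hypothetical and chosen only for illustration.

```python
# Hypothetical 4-bit opcodes for this toy machine.
HALT, LOAD_B, LOAD_A, STORE_A, ADD = 0b0000, 0b0001, 0b0010, 0b0100, 0b1000


def run_program(initial_memory: list, max_steps: int = 1000) -> list:
    """Run fetch-decode-execute until HALT and return the final memory."""
    memory = list(initial_memory)
    program_counter = 0  # the "instruction address register"
    register_a = 0
    register_b = 0
    for _ in range(max_steps):
        # Fetch: copy the next instruction into the instruction register.
        instruction_register = memory[program_counter]
        program_counter += 1
        # Decode: split the instruction into an opcode and an address.
        opcode = instruction_register >> 4
        address = instruction_register & 0x0F
        # Execute: carry out the decoded operation.
        if opcode == HALT:
            return memory
        elif opcode == LOAD_A:
            register_a = memory[address]
        elif opcode == LOAD_B:
            register_b = memory[address]
        elif opcode == ADD:
            register_a = (register_a + register_b) & 0xFF
        elif opcode == STORE_A:
            memory[address] = register_a
        else:
            raise ValueError(f"unknown opcode: {opcode:04b}")
    raise RuntimeError("program did not halt")


if __name__ == "__main__":
    program = [
        (LOAD_A << 4) | 14,   # A = memory[14]
        (LOAD_B << 4) | 15,   # B = memory[15]
        (ADD << 4),           # A = A + B
        (STORE_A << 4) | 13,  # memory[13] = A
        (HALT << 4),
    ]
    memory = program + [0] * 8 + [0, 3, 14]  # data lives at addresses 13-15
    print(run_program(memory)[13])  # 17
```

Note that instructions and data sit in the same memory, which is the defining trait of the von Neumann architecture mentioned earlier.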

Memory & Data Storage

We reviewed Random Access Memory in the section above. This type of memory allows for fast access from the CPU and involves the coordination of millions of electrical charges stored in gated latches, forming 1’s and 0’s that can be retrieved. If the electricity to a computer is turned off, all of the data stored in RAM is lost, as no electric current is persisted.

For persistent data, non-volatile storage is required. The form factors can be different, and today this is likely a Hard Drive, Flash Drive, or Solid State Drive (SSD). Whatever the form factor, data is still persisted as a binary 1 or 0, represented by either a magnetic orientation (hard drives) or trapped electrons (flash drives and SSDs). Data storage is growing exponentially (as we will see in an upcoming section below) and the technology is very complex. For a deeper insight into how SSDs work, this video is a great resource:

Part III - Operating Systems, Programs, & Computer Languages

It is mind-boggling to understand just how far we have come with the evolution of computer software. The first programs were stacks (and more stacks) of punch cards. Operations and data inputs were all literally punched onto index cards and fed manually into a computer, where the computer would interpret the punch codes, perform the operations, and then feed back output as punch cards (that would need to be interpreted again by humans). The US Air Force SAGE program in 1955 consisted of 62,500 punch cards (5 MB).

Computer programs eventually evolved to incorporate external input (from mechanical switches, keyboards, etc). The first operating systems enabled the same program to be run on different computers (write once, run everywhere). Operating systems also served as coordination on the computer so that multiple programs could be run, and ‘long running’ processes such as printing output did not take up all of the available compute power. Portability of programs and asynchronous operations unlocked even greater productivity.

The very first programs had to be written directly in machine code (1’s and 0’s). Additional programs were then created to assemble higher level programs, written in a more ‘English style’ (if, loop, jump, add, subtract), into machine code. This process has continued and we now have hundreds of languages, compilers, interpreters, and assemblers.
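The assembling step described above can be sketched as a very small assembler: a program that reads human-friendly mnemonics and emits the 1's and 0's of machine code. The instruction set below is invented for this example (4-bit opcode followed by a 4-bit address), and the mnemonic names are hypothetical.

```python
# Hypothetical instruction set: mnemonic -> 4-bit opcode.
OPCODES = {
    "HALT": 0b0000,
    "LOAD_B": 0b0001,
    "LOAD_A": 0b0010,
    "STORE_A": 0b0100,
    "ADD": 0b1000,
}


def assemble(source: str) -> list:
    """Translate one mnemonic per line into 8-bit machine instructions."""
    machine_code = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        mnemonic, *operands = line.split()
        if mnemonic not in OPCODES:
            raise ValueError(f"line {line_number}: unknown mnemonic {mnemonic!r}")
        address = int(operands[0]) if operands else 0
        if not 0 <= address <= 0x0F:
            raise ValueError(f"line {line_number}: address must fit in 4 bits")
        machine_code.append((OPCODES[mnemonic] << 4) | address)
    return machine_code


if __name__ == "__main__":
    source = """
        LOAD_A 14    # read the first number
        LOAD_B 15    # read the second number
        ADD
        STORE_A 13   # save the result
        HALT
    """
    for instruction in assemble(source):
        print(f"{instruction:08b}")
```

Compilers and interpreters for modern languages do far more work, but the core job is the same: translate text a human can read into bits a CPU can execute.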

And now nearly every programming language comes with a multitude of frameworks (e.g., Rails for Ruby, .NET for C#) and templates for bootstrapping the development of a program or application. Software exists to facilitate shared development, deployment, and testing. Libraries are available across all languages, and reusing code is as easy as downloading a package and adding an ‘import’ or ‘using’ statement to include millions of lines of code, all abstracted away.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Net;
using System.IO;
```

Programming today is a web of libraries and packages, all put together on top of an enormous pyramid of abstractions all the way down to the manipulation of various electrical charges and electrons.

Part IV - The History and Milestones of Computing

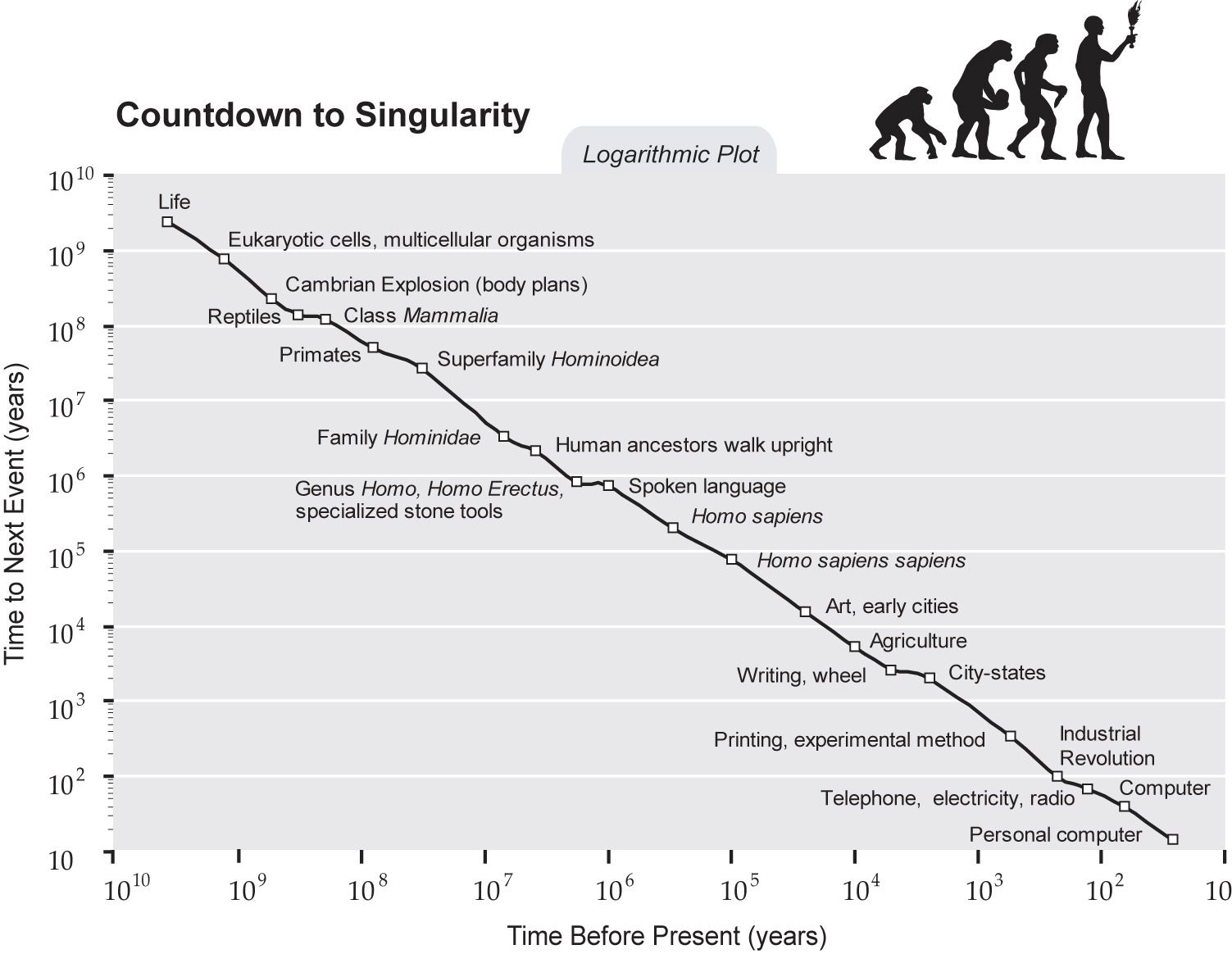

We have covered data, computers, and programming languages and how each works. The massive layers of abstraction involved in computing are astounding - it is hard to comprehend just how far we have come as a society. We became an agrarian society only 12,000 years ago (~400-500 generations).

6 generations ago we invented electricity (1880’s). The best selling PC in 1975 was the Altair 8800, which required manually flipping switches to toggle bits. In the 2 generations since then, we have built computers capable of leveraging artificial intelligence to drive a car autonomously. Only ~2% of human history elapsed between our transition to an agrarian society and the invention of electricity. And just two generations of humans have seen a personal computer.

This lens of history is important for understanding just how exponential the growth (and price performance) of computing is. By understanding the timeline for innovation, what the early days looked like, and how far we have come, we can use history to analyze the present (where innovations in AI and cryptocurrency look to have many similarities) and try to predict the future. In 1977, IT made up approximately 4.2% of GDP. Although difficult to quantify, the digital economy is now ~15% of world GDP and has grown at a rate of 2.5x the rest of the economy. (GDP and GDP per capita have also been growing exponentially since the invention of electricity.)

Some of the early computers and milestones are outlined here:

If we look graphically at the major milestones, we can see just how condensed the history of computing is. We are truly in the exponential age of information.

3000 BC - 1880 - Mechanical Computers

The abacus was the first computing device (also from the ancient Mesopotamians). It was used to perform arithmetic and act as a ledger. Since then computing evolved as a manual and mechanical process all through the 1800’s. Examples include the slide rule, loom, and various tabulation machines. Herman Hollerith invented the first tabulation device for the 1890 census that relied on completing a ‘circuit’ by having a pin drop into mercury for different values (from punch cards). Hollerith’s Tabulating Machine Company later merged with other companies to form IBM.

1880 - 1950 - Electro-mechanical Computers

Early computers had logic gates that consisted of electro-mechanical relays and vacuum tubes. An electric current would ‘attract’ a piece of metal and complete the constructed circuit. Vacuum tubes served as a similar way to control the flow of electricity between gates and circuits.

1950 - present - Silicon & Transistor Computers

The first working transistor was created in 1947 and went on to replace electro-mechanical relays and vacuum tubes in computers (and nearly all electronic devices). Transistors are made of semiconductor material, and an electrical pulse can activate or deactivate their ability to conduct electricity (and so complete a circuit and logic gate). Transistors today are an integral part of electronics.

1975 - present - Personal Computers

The Altair 8800 was released in 1975 and offered a way for computer enthusiasts to manually program the computer by flipping a series of switches and inputs. This was a state of the art computer *less than 50 years ago*. Computers of the 1990’s were clunky devices with a separate monitor and CPU tower, floppy disk drives, and CD-ROMs. Today, laptops and high powered gaming computers are ubiquitous.

2007 - present - Smart Phones

The first iPhone was released in 2007, and now more than 6 billion people regularly use smartphones.

Part V - The Exponential Growth and Trends in Computing

As this history suggests, the computer revolution continues to advance at an exponential pace. A snapshot comparison between one of the first and most powerful early computers, the ENIAC (completed in 1945), and a made-for-the-masses MacBook in 2021 reveals the exponentiality in specs alone:

The ENIAC in its final configuration (mid-1950s):

- Parts: 18,000 vacuum tubes; 7,200 crystal diodes; 1,500 relays; 70,000 resistors; 10,000 capacitors; approximately 5,000,000 hand-soldered joints.

- Clock Speed: 100 kHz (100k cycles per second)

- Weight: more than 27 tons

- Physical Space: 1,800 sq ft

- Electricity Consumption: 150 kW

- Cost: today’s equivalent of $6,000,000

A top of the line brand new MacBook in 2021:

- CPU: ~56 billion transistors, 16 GB RAM, 2 TB Storage

- Clock Speed: 3.2 GHz (3.2 billion cycles per second)

- Weight: 3.5lbs

- Physical Space: ~1 sq ft

- Electricity Consumption: ~40W (peak)

- Cost: ~$2,000
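To put the two spec lists side by side, the short Python sketch below computes how many times better the MacBook is on each measure. The figures are the ones listed above; the one added assumption is that "27 tons" means US short tons (2,000 lb each), and the dictionary keys and function name are purely illustrative.

```python
ENIAC = {
    "clock_hz": 100_000,
    "weight_lb": 27 * 2_000,  # assumes short tons of 2,000 lb
    "floor_sq_ft": 1_800,
    "power_w": 150_000,
    "cost_usd": 6_000_000,
}
MACBOOK_2021 = {
    "clock_hz": 3_200_000_000,
    "weight_lb": 3.5,
    "floor_sq_ft": 1,
    "power_w": 40,
    "cost_usd": 2_000,
}
HIGHER_IS_BETTER = {"clock_hz"}


def improvement_factors(old: dict, new: dict) -> dict:
    """Return how many times better `new` is than `old` for each spec."""
    factors = {}
    for spec in old:
        if spec in HIGHER_IS_BETTER:
            factors[spec] = new[spec] / old[spec]
        else:
            factors[spec] = old[spec] / new[spec]
    return factors


if __name__ == "__main__":
    for spec, factor in improvement_factors(ENIAC, MACBOOK_2021).items():
        print(f"{spec}: ~{factor:,.0f}x")
```

On these numbers the clock is about 32,000 times faster while the machine draws about 3,750 times less power and costs about 3,000 times less.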

Computers are enormously complex, and there is no single metric that can encapsulate all trends and performance. Each of the below metrics serves as insight into just how far computing has come in the last century. The metrics are broken out into categories of computer performance, computer prices, and computer productivity enablement.

I - Exponential Computer Performance Increases

The manufacturing techniques, architecture, processing speeds, and memory/storage capacities of computers continue to increase exponentially. Below are some examples of this exponential growth.

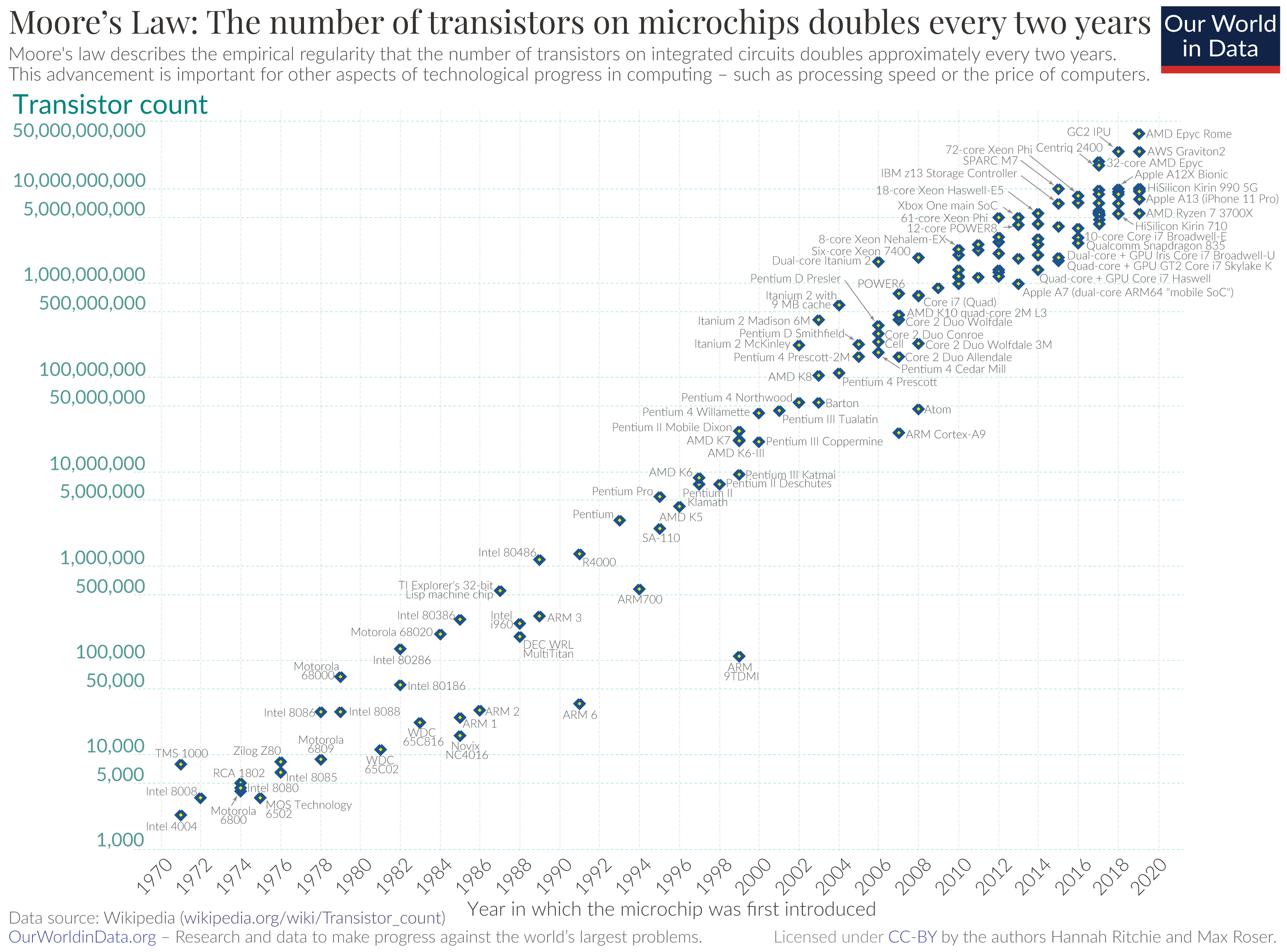

Number of Transistors on an Integrated Circuit - Moore's Law

Gordon Moore proposed his 'law' that the number of transistors on an integrated circuit would double roughly every 18 months to 2 years. A transistor is the building block of a logic gate and represents one of the lowest abstractions of a computer. The first transistor based computers came out in the 1950's, and since then Moore's prediction has broadly held true.

The number of transistors indirectly gives context for how many operations can be performed on a computer in a given second (more transistors -> more logic gates -> more processing). Through 2020, this prediction has continued to hold true and we have seen an exponential rise in transistor counts on computer circuits. The first microprocessors of the early 1970's had ~2k transistors. Computer microchips today have upwards of 50 billion transistors.
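A quick calculation shows how closely those two data points track Moore's prediction. The sketch below uses the transistor counts quoted above (~2k and ~50 billion); the specific years 1971 and 2021 are assumptions chosen to stand in for "the early 1970's" and "today", and the function name is illustrative.

```python
import math


def years_per_doubling(start_count: float, end_count: float, years: float) -> float:
    """Average time for the count to double, assuming steady exponential growth."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


if __name__ == "__main__":
    start_year, end_year = 1971, 2021      # assumed years for the two data points
    start_count, end_count = 2_000, 50e9   # transistor counts quoted in the text
    doublings = math.log2(end_count / start_count)
    period = years_per_doubling(start_count, end_count, end_year - start_year)
    print(f"{doublings:.1f} doublings, one every {period:.2f} years")
```

Under these assumptions the count doubled about 24.6 times, or roughly once every 2 years, which sits at the upper end of the 18 months to 2 years range.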

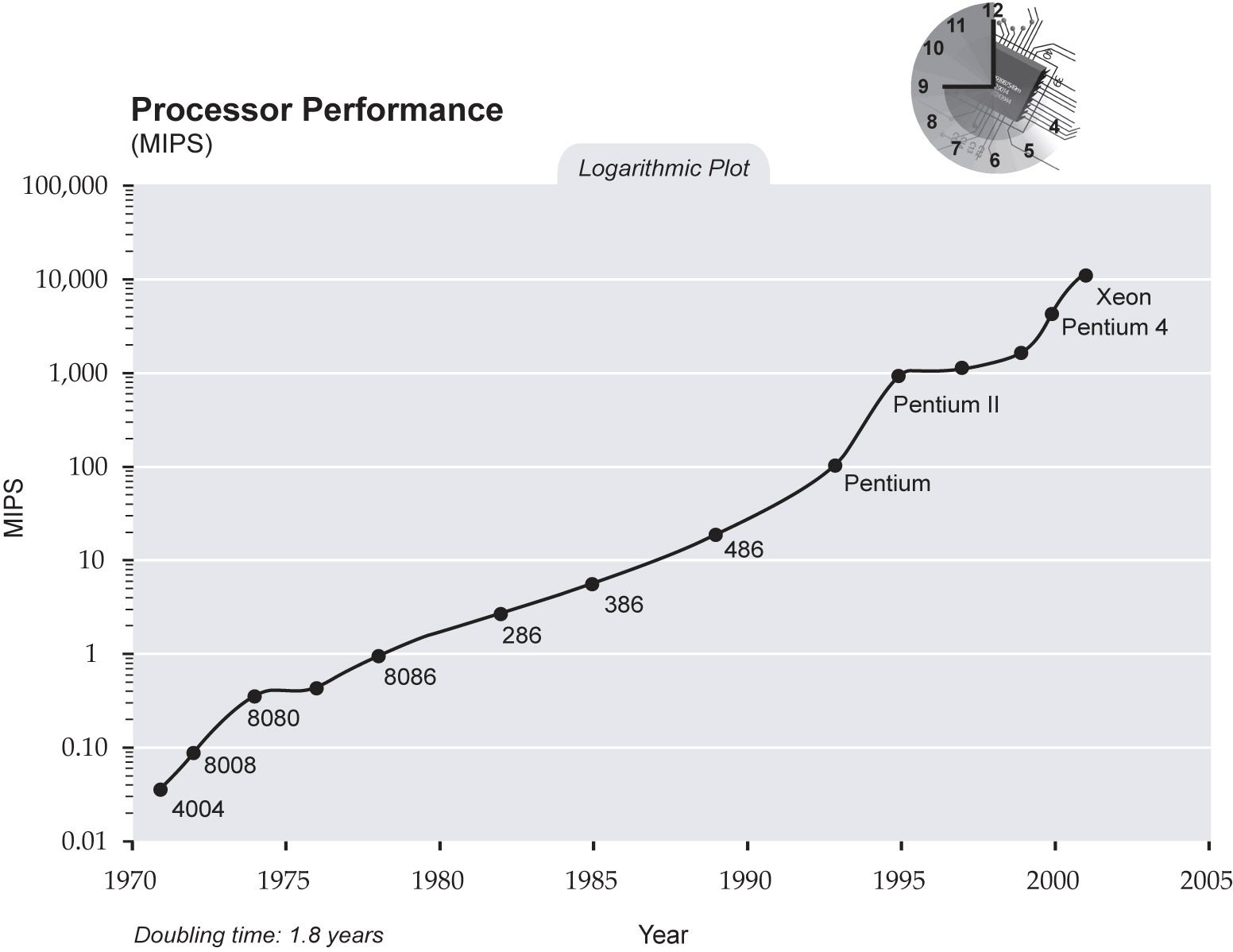

(Millions of) Computer Instructions Per Second - MIPS

There is no exact formula for how many instructions per second a CPU can process, as different operations, hardware, and other variables (caching, pipelines, architectures) cause inconsistencies when comparing different chips. However, MIPS offers another abstract lens through which we can view the overwhelming exponentiality of CPU speed increases, as evidenced in the (log) chart below. In 1978, the Intel 8086 was capable of on the order of 1 million instructions per second. In 2020, the AMD Ryzen Threadripper 3990X can perform 2.3 trillion instructions per second (roughly a 2.3 million x increase over ~40 years).
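The same two MIPS figures can be turned into an average yearly growth rate. The sketch below takes the numbers quoted above (about 1 million instructions per second in 1978 and 2.3 trillion in 2020) at face value and assumes smooth compound growth between them, which is a simplification; the function names are illustrative.

```python
import math


def annual_growth_factor(start_value: float, end_value: float, years: float) -> float:
    """Constant yearly multiplier that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years)


def doubling_time_years(growth_factor: float) -> float:
    """Years needed to double at a given yearly multiplier."""
    return math.log(2) / math.log(growth_factor)


if __name__ == "__main__":
    mips_1978 = 1.0    # millions of instructions per second, as quoted
    mips_2020 = 2.3e6  # 2.3 trillion instructions per second, in MIPS
    factor = annual_growth_factor(mips_1978, mips_2020, 2020 - 1978)
    print(f"total increase: {mips_2020 / mips_1978:,.0f}x")
    print(f"average growth: {(factor - 1) * 100:.0f}% per year")
    print(f"doubling time: {doubling_time_years(factor):.1f} years")
```

On these figures, throughput grew by a little over 40% per year on average, doubling about every two years.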

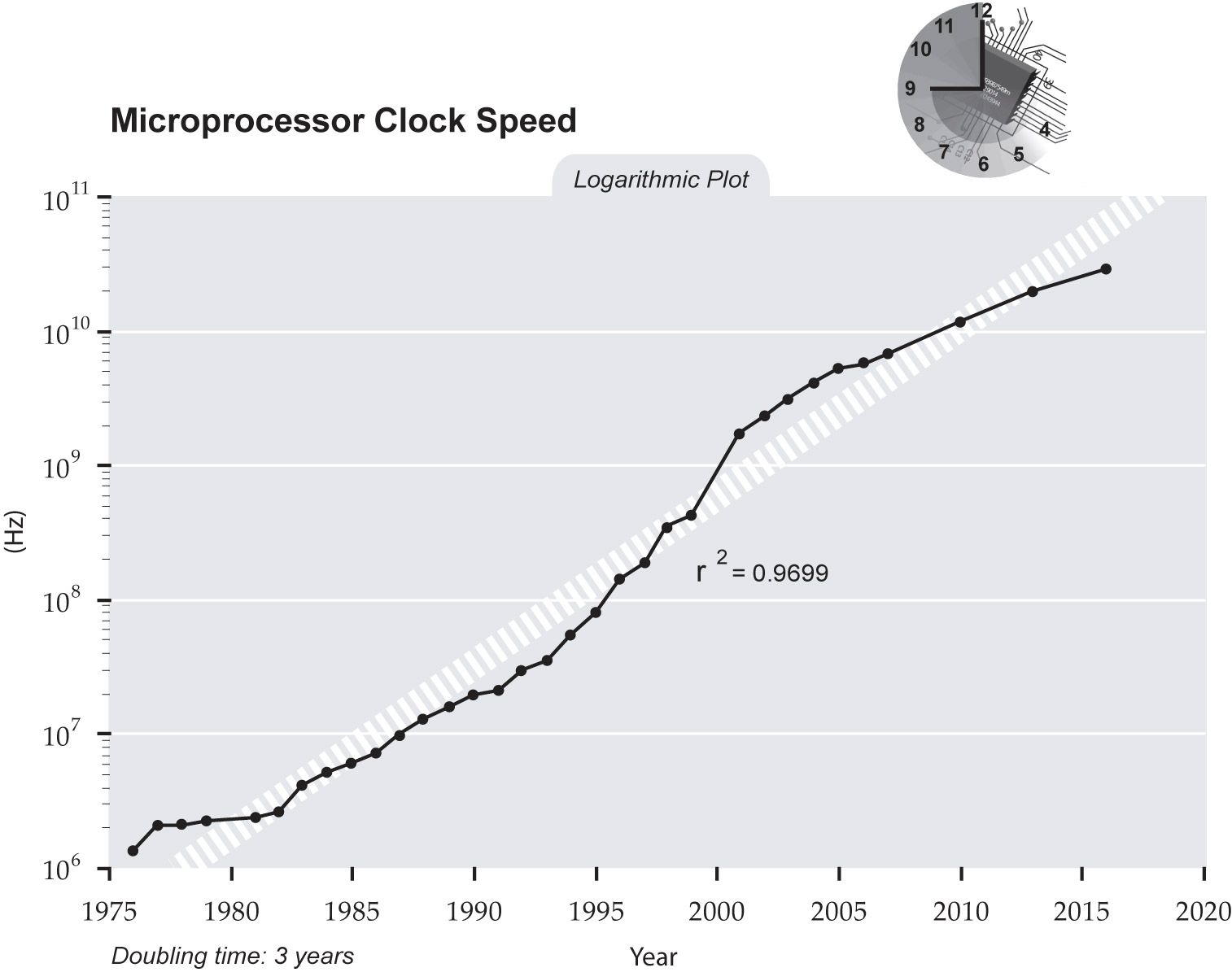

Clock Speed

Clock speed is another metric that can serve as a performance indicator. The clock of a computer continually sends electrical pulses that advance the CPU to its next operation. The amount of work done per pulse can vary among computers, but generally speaking this is a good indicator of how fast the computer is operating and performing calculations. 1 Hertz (Hz) represents one pulse per second, roughly the pace of a fast human performing one addition per second. Early personal computers ran at a few megahertz (millions of cycles per second), and computers today run at gigahertz (billions of cycles per second).

Data Storage

That storage would be enough to store one picture from a smartphone today. Today, a 2 TB portable hard drive is a click away for ~$50 on Amazon, and the largest SSDs have ~100 TB of storage.

II - Exponential Compute and Storage Price Decreases

Just as the performance of computers has increased, the costs of the various parts of a computer and of memory/storage have decreased, and continue to decrease, exponentially.

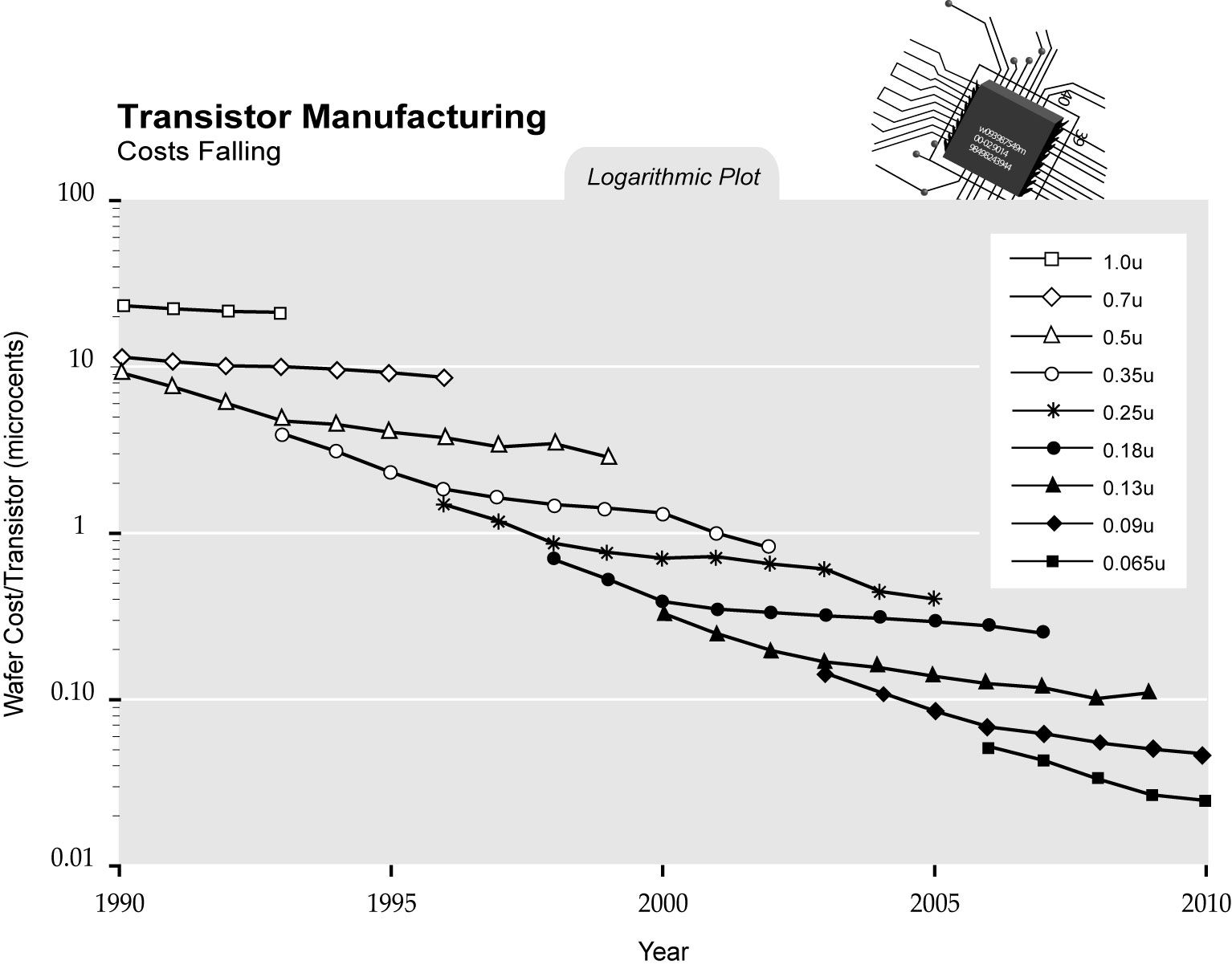

Price of Transistor Manufacturing

Just as we can fit exponentially more transistors into an integrated circuit, the same transistors continue to fall in manufacturing price as evidenced in this log scale chart from Ray Kurzweil (The Singularity is Near):

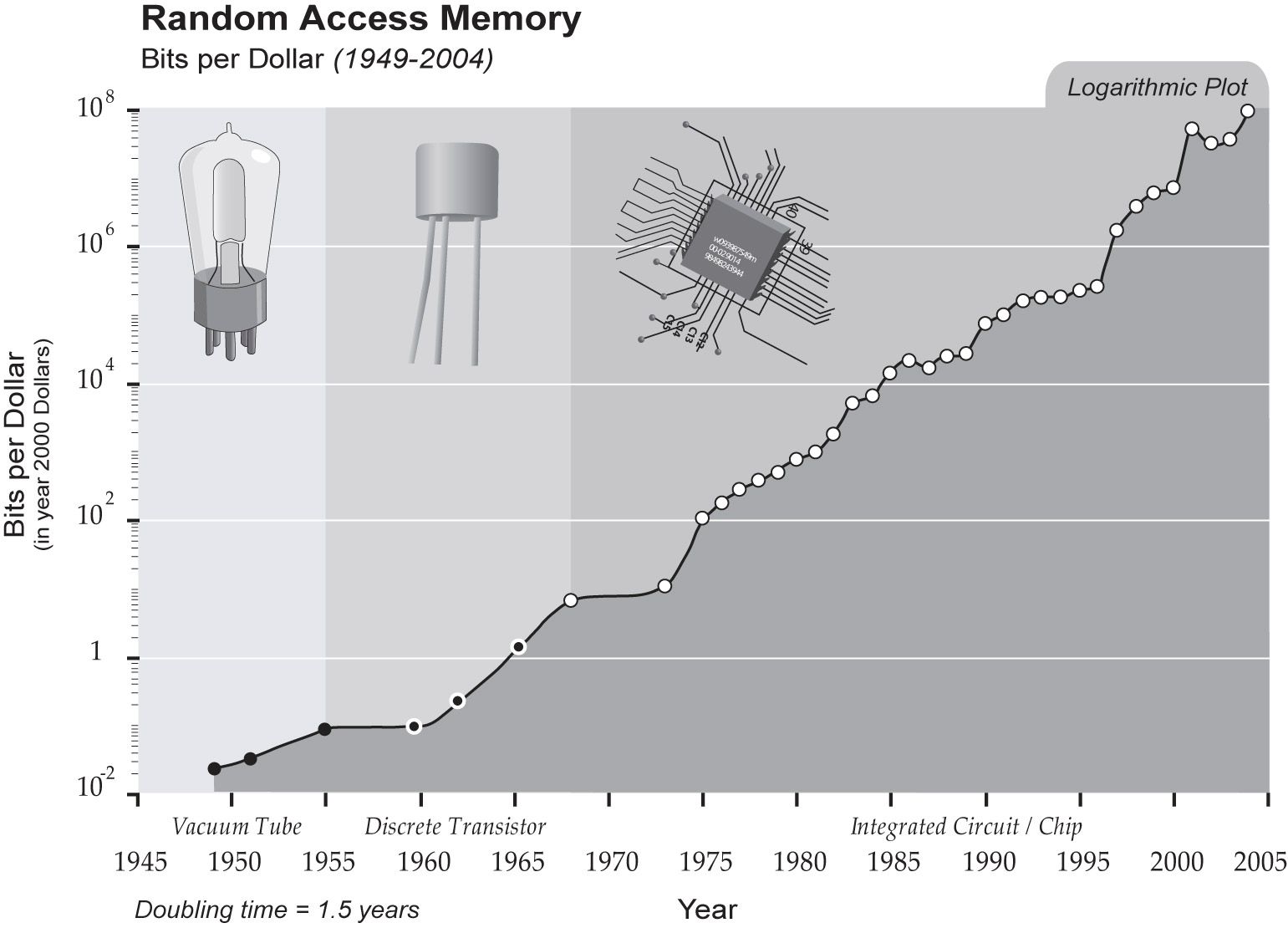

Price of Memory

Just as transistor manufacturing costs have decreased, the costs for data storage and random access memory continue to fall as evidenced in the increase in bits per dollar of RAM:

III - Exponential Productivity Gains

How have computers increased productivity? At a high level, we know GDP (and GDP per capita) have increased since the inventions of electricity and computing. Furthermore, IT's share of GDP continues to grow:

Computers continue to get faster and cheaper and take over a larger portion of the economy. And we have become more efficient at how we make use of computers. The number of professional programmers, the availability of various libraries (or Lego-like building blocks of programs), and the sharing of knowledge within the software engineering profession all continue to grow at a significant rate, as evidenced by the statistics below:

Number of Professional Programmers

The IBM & Air Force SAGE project employed ~20% of all programmers in the 1950's.

- In 2013, there were approximately 18.2 million software developers

- In 2021, it is estimated we have 27 million software developers worldwide.

- By 2040, it is estimated we will have 45 million software developers worldwide (roughly a 67% increase over 2021)

Open Programming Libraries Available

The first computer programs were written on a piece of paper as pseudocode, before being translated carefully onto punch cards. Now, libraries and pre-packaged programs are a click and download away. Millions of lines of code that have already been written are free and available for reuse across new applications being developed.

This paper analyzes growth in open source software (OSS) from the late 90's through the early 2000's and finds exponential growth in lines of code added (I know - not always a great metric) and number of projects. Open source software, the number of GitHub projects and accounts, and the commercial success of OSS continue to grow.

Programming Community - Research & Q&A

Stack Overflow is one of the most widely used hubs for Q&A and sharing resources among developers. As of 2021, approximately 7k new questions are asked daily (during the workweek), another ~7k answers are provided, ~20k comments are added, and almost 9k new developers sign up daily.

What once would have involved reading hardcopy reference manuals and punching index cards is now a quick Google search and Stack Overflow question away from a solution. We invented computers, programmed them, and networked them together - and now we have an exponentially faster (and growing) feedback cycle to learn, create new programs, and solve problems (via software engineering). The computing super cycle is already here and well underway.
