Networking and Internet Fundamentals - Connecting Every Computer

The idea of the metaverse keeps being thrown about. When will AR/VR take over? What is the future of web3? Will AI take over?

Because of the internet, we are already in the metaverse. The internet is a massive infrastructure coordinated and decentralized among private corporations, governments, and individuals. And all of these parties (and the more than 3.5 billion people with internet access) are able to communicate at the speed of light using a layered series of protocols that have their roots in the original ARPANET of the 1960s.

These protocols and the history of how the internet came to be are as relevant as ever as we look at patterns underlying the transition to web3 and crypto. Only in the last three decades has the world wide web taken shape, and the pace of change continues in exponential fashion. The early days of the two minute dial up on the family phone line to log onto AOL have long been replaced with gigabit fiber and networked space satellites.

Today, the internet is a fundamental layer of society on top of energy and electricity. The networking of computers (which have improved exponentially) through all of the communication infrastructure and protocols of the internet forms the basis of all aspects of modern society - finance, banking, ecommerce, communication, social media, blockchain, crypto, web3, AI. None of these exist in their modern form without the internet, and all sit as abstraction layers, applications, and extensions on top of the internet layer.

We all know what the internet is - to be reading this article requires an internet connection. But what goes on after you plug in your modem and log on? In this article we explore:

Part I - The History of the Internet - what were the intentions and ideas of the early internet? How did it develop into what it is today?

Part II - The Physical Infrastructure of the Internet - how do all of the pieces of the internet work together? What is the internet backbone, and how do ISPs and LANs all operate together (and what are those terms)?

Part III - Networking and Communication - how do all of the devices on the physical infrastructure of the internet talk to each other? What are the various protocols of the internet and how do they work?

Part IV - Domain Name System (DNS) - how do computers find and talk to websites?

Part V - Protocols, Standards, and Governance - how decentralized is the internet? Who manages all of the protocols and how have they evolved over time? What can we learn from this evolution that applies to the next iterations of the internet?

Part VI - Macro Trends - how exponential has the growth of the internet been? What have been the impacts on society?

This article is meant to be a primer on the internet. It acts as a 30k foot overview on important topics with some sections and opportunities to dive down one level to appreciate some of the details of this massively complex architecture that makes up one of the most important layers of our world today - all with the goal to give some baseline context for future conversations of web3, crypto, AI, and our continued exponential change.

Part I - History of the Internet

The origins of the internet are only two generations old. In that time the internet has grown from four connected computers to the worldwide infrastructure with billions of connected devices today. The internet today is an immensely complicated network of computers, hardware, and global telecommunication infrastructure. But the architecture, design, and protocols that were first conceptualized in 1962 and developed out of the Defense Advanced Research Projects Agency (DARPA) still hold true today, and the history and development of the internet offer a fantastic insight into how the modern system works.

Two primary motivations drove the research of the early internet at DARPA:

- How could a computer network be used for redundant fault tolerant communication for the military?

- Could the resources of a computer (which was very expensive) be shared more effectively among multiple people?

Rewind back to the year 1962 - computers are still massive mainframes that take up entire rooms.

Programming functionality is limited to punch cards and early assembly languages. It was at this time that J.C.R. Licklider of MIT wrote one of the first known memos discussing his “Galactic Network” concept, where he envisioned a globally interconnected set of computers through which everyone could quickly access data and programs from any site. Mr. Licklider convinced his colleagues (and successors) at DARPA to help him build out this concept and the rest is history...

1964 - The first book on packet switching theory is published. This idea of breaking up a message into many smaller packets that can be transmitted across a network (and then reassembled) is a fundamental part of the Transport Layer of the Internet (discussed further in this article).

1965 - A TX-2 computer in Massachusetts is connected to a Q-32 computer in California with a low speed dial-up telephone line creating the first (however small) wide-area computer network.

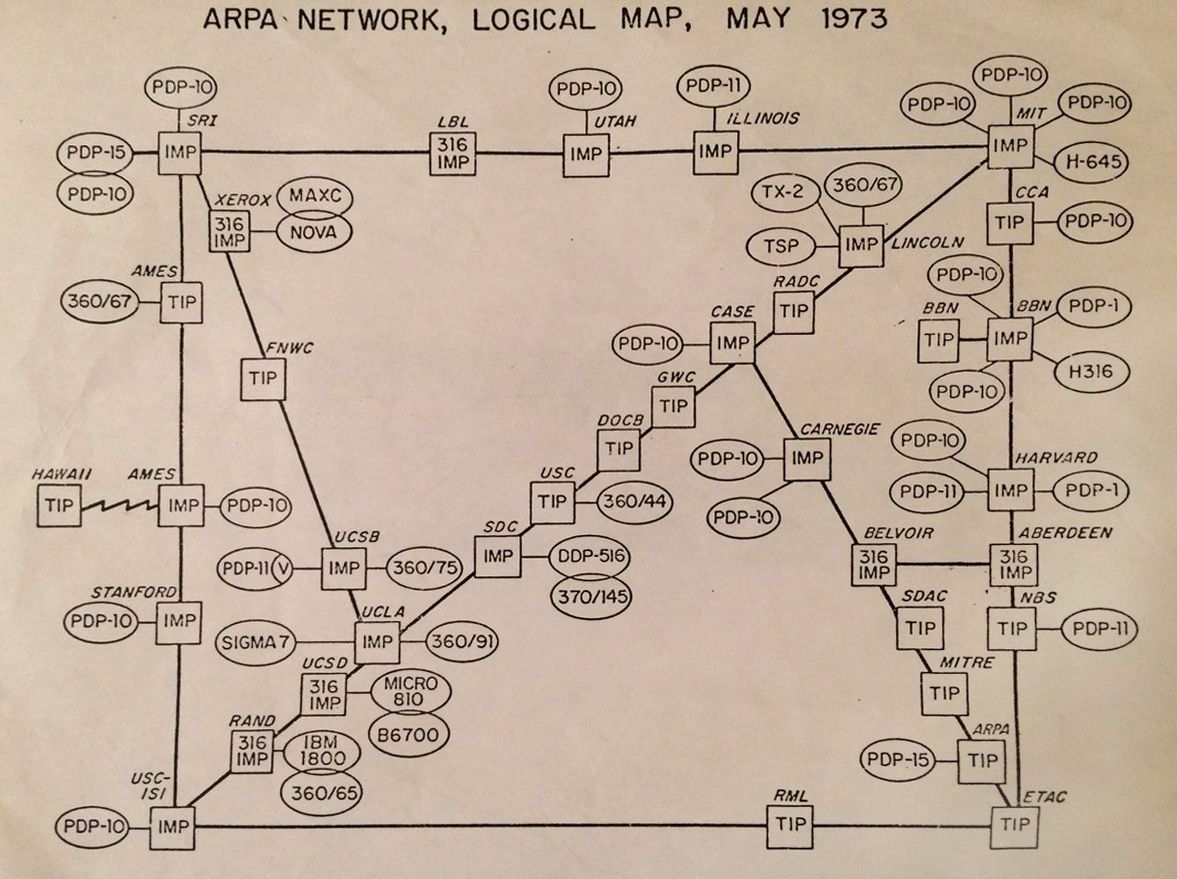

1969 - Four host computers were connected together into the initial ARPANET, forming the first internet.

1972 - Electronic Mail (Email) was first introduced.

1972 - The first concepts of Transmission Control Protocol (TCP) and Internet Protocol (IP) are introduced. These two protocols (to this day) form two of the most important layers of the internet (discussed further in this article).

1973 - Ethernet technology is invented, forming one of the most important layers for physical data transmission (also used today)

1983 - The Internet grew so large that manual addressing of machines on the network was no longer possible. The Domain Name System (DNS) was invented.

In 1973, the ARPANET was a network of some 50 networked devices. Today there are some 22 billion devices connected to the internet.

While the protocols, governance structure, and device and physical infrastructure have greatly evolved over time, the original ARPANET (internet) network and its concepts live on. Only more (a few billion) devices have been added onto the network to make up the internet we know today.

Part II - Physical Infrastructure

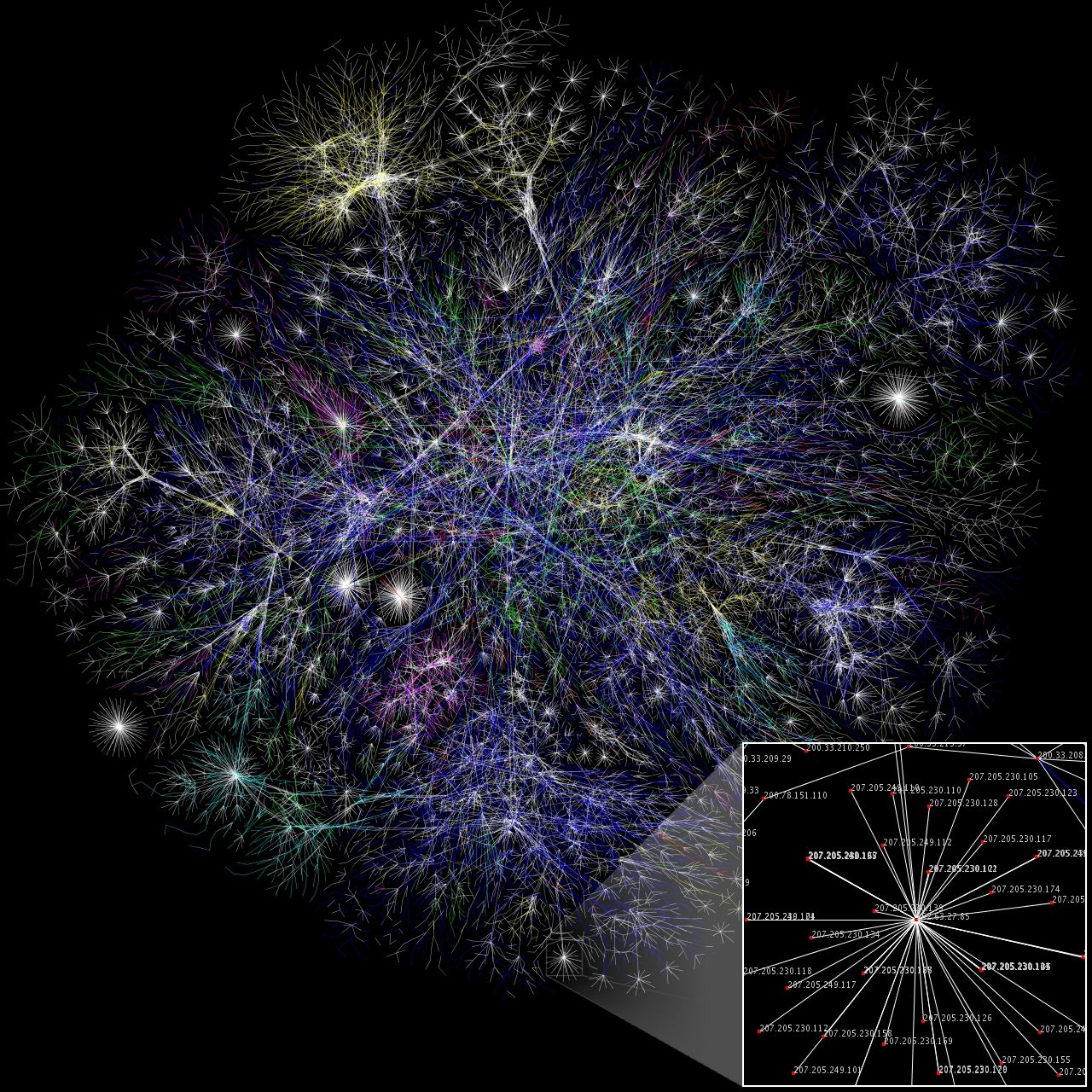

At its core, the internet is just a network of connected cables and hardware, in which devices communicate with each other over specific protocols. The architecture of the internet allows for any computer or network to be added to it and maintain the ability to connect from any node (computer) on the network to another. This architecture enables what was just 4 original ARPANET computers to start, then ~50 in 1973, and now billions of devices today to communicate with each other. And all of this communication is facilitated through physical mediums outlined below.

Computers process binary data through 1’s and 0’s, which are represented as electrical signals within the machine. Communicating data across a network follows the same pattern, and in this case the transmission of data from one machine to another is carried as an electric current across a physical wire.

(Ethernet) Cables: Cables are the lowest level of the physical infrastructure of the internet. Ethernet (invented in 1973 and commercially introduced in 1980) has evolved into the standard for transmitting digital data, and encompasses coaxial, twisted pair and fiber-optic physical media interfaces, with speeds from 1 Mbit/s to several hundred Gbit/s. CAT-5 cables are a typical example of an ethernet cable. Today fiber optic cables can transmit data at speeds of gigabits per second.

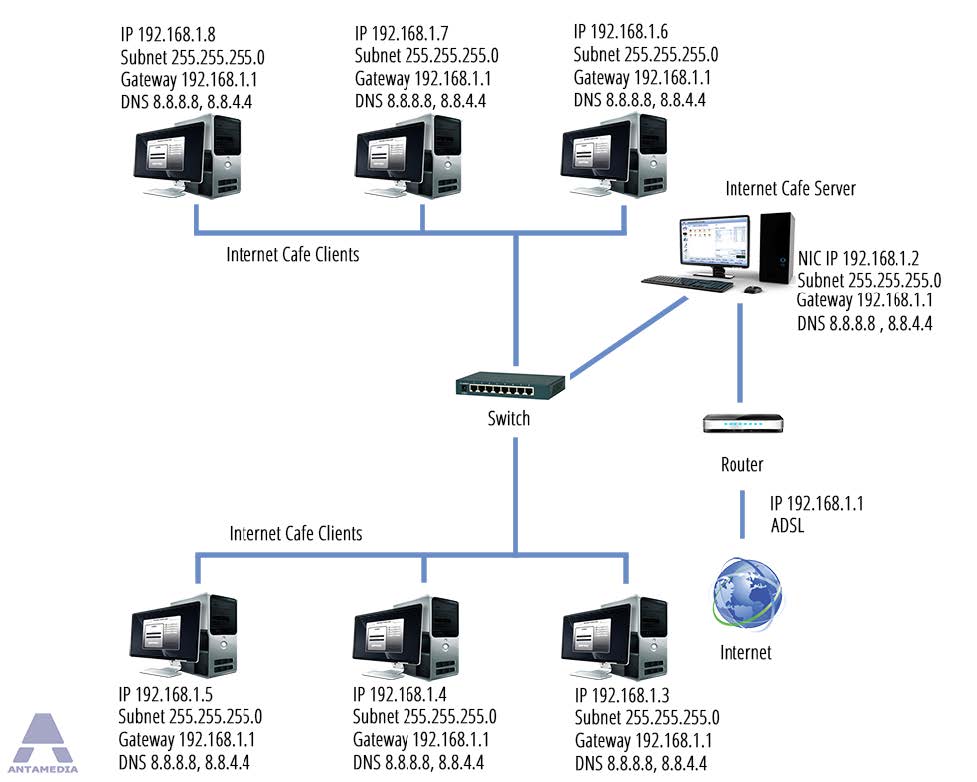

Network Interface Card (NIC): A Network Interface Card is the physical computer hardware component that sends and receives electrical signals and interprets the voltage changes as 1’s and 0’s. Each NIC also has a globally unique MAC address that identifies it within a local network. With a NIC (in each computer) and an ethernet cable connecting the two, binary data can be transmitted between two connected computers by emitting voltage differences and receiving and reconstructing those varying electrical pulses.

Switch: If each computer required a direct wired connection to every other computer on the network, the internet would be limited to a handful of machines. Switches and Routers are the hardware that enable vast networks of computers to talk with one another. A switch connects a network of computers together so that each can talk to each other (through the switch) or to the switch itself. A switch will come to recognize the address of each connected machine and facilitate inter device communication.

Router: Routers are the hardware that connect switches together - thereby enabling the connection of multiple switches (and other routers), and forming the backbone equipment of the internet. Each router holds a list (route table) of the networks it knows how to reach and the next hop toward each of them. This self discovery process and the algorithms/protocols enabling this communication mapping is the beauty of the internet (and discussed in further detail in the next section).

Modem: Modem is the short name for modulator/demodulator. These devices take a digital signal (representing 1’s and 0’s) and translate it into the analog signal needed to traverse phone lines, and translate incoming analog signals back into digital ones. A modem is the device that connects a networked set of devices (through switches and routers) to the Internet Service Provider (ISP).

But what about my home internet router? Where is the switch? Where is the modem? Most home routers combine a switch, router, and modem into one hardware device. On the back of the router are various ethernet ports; the device also has a wireless access point and a modem that allows all signals to be sent onward through the phone lines.

What about Wifi? Wireless connections are provided by a wireless access point, which most home routers have built in. A plain ethernet switch has no radio, so it cannot accommodate wireless connections on its own.
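The way a switch comes to recognize the address of each connected machine can be sketched in a few lines of Python. This is a simplified illustration, not how any particular switch is implemented: the `LearningSwitch` class, the port numbers, and the MAC addresses are all hypothetical.

```python
class LearningSwitch:
    """Toy model of a switch that learns which MAC address sits on which port."""

    def __init__(self, port_count):
        self.ports = list(range(port_count))
        self.mac_table = {}  # learned mapping: MAC address -> port number

    def receive(self, in_port, src_mac, dst_mac):
        """Handle one frame and return the list of ports it is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the incoming one.
            return [port for port in self.ports if port != in_port]
        if out_port == in_port:
            # Destination is on the same port as the sender: nothing to forward.
            return []
        return [out_port]


# Hypothetical MAC addresses for two machines plugged into ports 0 and 2.
MAC_A = "02:00:00:00:00:0a"
MAC_B = "02:00:00:00:00:0b"

switch = LearningSwitch(port_count=4)
first_send = switch.receive(0, MAC_A, MAC_B)   # B is unknown, so the frame floods
reply = switch.receive(2, MAC_B, MAC_A)        # A was learned, so only port 0
second_send = switch.receive(0, MAC_A, MAC_B)  # B was learned, so only port 2
```

After the first exchange the switch no longer needs to flood, which is why traffic between two machines does not burden every other device on the switch.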

How does a Local Area Network Connect to the Internet?

At this point we have enough equipment to create a local area network (LAN) where all of the devices could connect with one another. This is a good start, but for everything to be truly valuable, we need to connect this LAN to the broader ‘internet’.

For most of us, we see this happen after our local Internet Service Provider (ISP) comes by (if we are lucky within a four hour appointment window), and they plug in the router and modem to the outlet into the wall. Our home network (basic LAN) of a few computers and devices is now able to connect with the outside world - via this new connection to the Internet Service Provider (ISP).

But what is the ISP doing with our data? And how do they offer an instantaneous way to connect our new LAN with the wider world? Again comes a broad network of networks and a new layer of abstraction. To reiterate, the internet is a network of networks, which at its base is a series of interconnected cables. No one government or entity owns the internet, and rather ISPs and governmental entities come together to provide the equipment (cables and routers) to interconnect everything. This is known as the internet backbone.

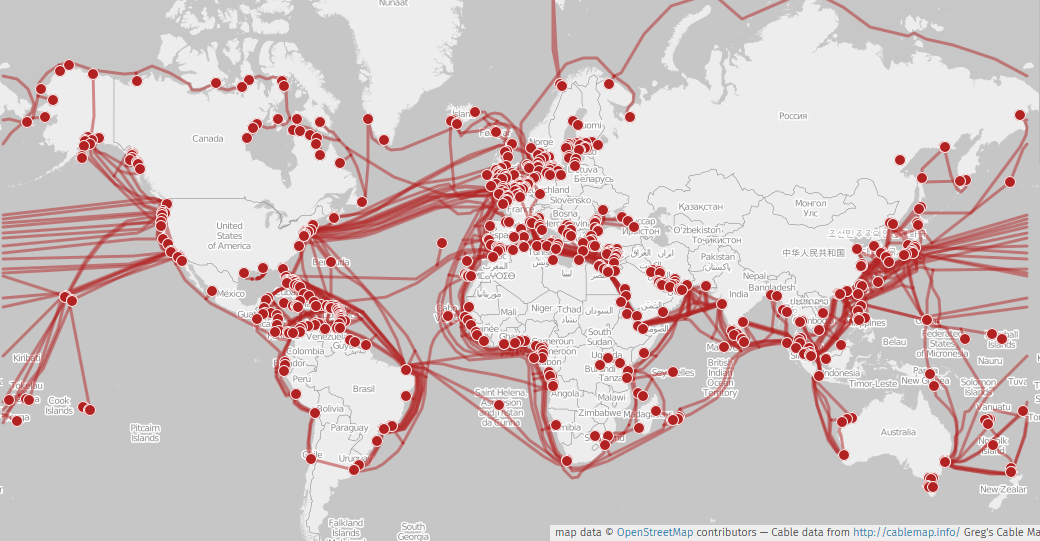

This is a map of all underwater cables (note these are just underwater and connecting the continents together- ‘on land’ cables are far more dense all over the world).

The Internet Backbone

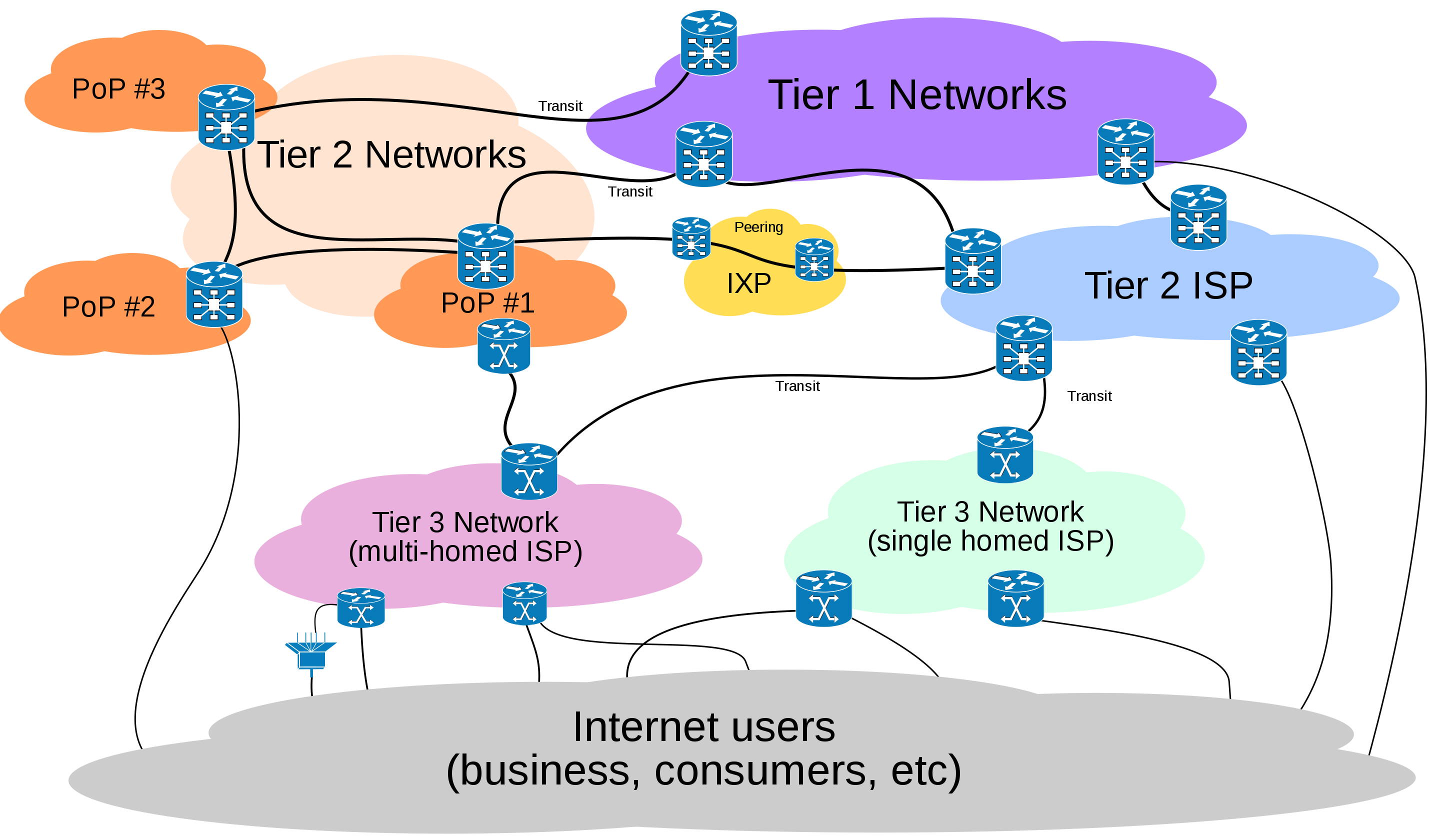

Internet Service Providers (ISPs) all work together to share data (packets) across their networks. ISPs build physical infrastructure (cables, routers), negotiate agreements with other ISPs to facilitate data transfer, and in many cases provide end customers with internet access. ISPs are categorized into three tiers (Tier 1, 2, and 3).

Tier 1 Providers are the backbone of the internet. These providers can provide an end to end connection for any message. Examples of Tier 1 providers include AT&T, T-Mobile, Lumen, and Deutsche Telekom.

Tier 2 Providers provide connection to the internet among a region and interface with consumers to provide connections directly to LANs (aka households and businesses). Tier 2 ISPs own and provide infrastructure (cable, fiber, and routers) within the region in which they operate. Examples of Tier 2 ISPs are Comcast and Vodafone.

Tier 3 ISPs do not provide any equipment and only interact with consumers.

In this pyramid hierarchy, ISPs will contract with other ‘peer’ ISPs and higher Tiers (i.e. Tier 2 will contract with Tier 1) so as to use their equipment (end to end communication channels) to route data. These transmission agreements are typically not published, but various agreements between these networks exist and provide guidelines on how each ISP will ‘peer’ and share resources to ensure end to end delivery of network data across the internet.

Routing protocols such as the Border Gateway Protocol (BGP) exist in order to exchange routes and transfer data across the borders of the various ISP networks (known as Autonomous Systems).

In addition, large cloud computing vendors and web companies (Netflix, Facebook) contract and peer directly with Tier 1 and Tier 2 ISPs. Content Distribution Networks (CDNs) such as Akamai serve as a distributed network for caching and serving files and other internet content to alleviate traffic along backbone hotspots and ensure fast delivery of packets to requesting devices.

This completes the full circuit, and the protocols (below) allow any person or entity to create a new LAN and connect to the ‘internet’ backbone through a layer of ISPs. This distributed network, the agreements between various ISPs, and the routing infrastructure and protocols of the internet ensure that any computer or LAN can access any other computer across the internet.

Part III - Networking and Computer Communication

So far we have only touched on the hardware and physical components of the internet. The vast networks of cables, switches, routers, modems, and communication channels can connect computers across the globe.

- How does each computer and router know how to participate in this network?

- How do the series of 1’s and 0’s transmitted from your computer get routed and rendered on another computer?

- How does the response make its way back to you?

The answer is a very complicated set of protocols and rules that all nodes and hardware follow. This adherence to protocols has allowed for the internet to grow from some 50 nodes to billions of connected devices all in a decentralized manner.

The Open Systems Interconnection model (OSI model) is a conceptual model that characterizes and standardizes the communication functions of a telecommunication or computing system without regard to its underlying internal structure and technology. Its goal is the interoperability of diverse communication systems with standard communication protocols.

The OSI model consists of 7 layers, with each layer providing functionality only to the layer above it. In this manner, and as we will see, each layer can accommodate multiple implementations and can be broken down into specific modular communications.

Layer 1 - Physical Layer

The lowest level of the OSI model is all about how data is transmitted between two network devices. Every device reads binary (1’s and 0’s). How this binary data is transmitted through changes in voltage over a cable and the protocols that the two machines share to make this happen encompass the physical layer. Ben Eater gives a fantastic walkthrough of the synchronization, rates, and various techniques (Manchester encoding) that occur to ensure that two computers can agree upon how to transmit and receive data at the physical layer.

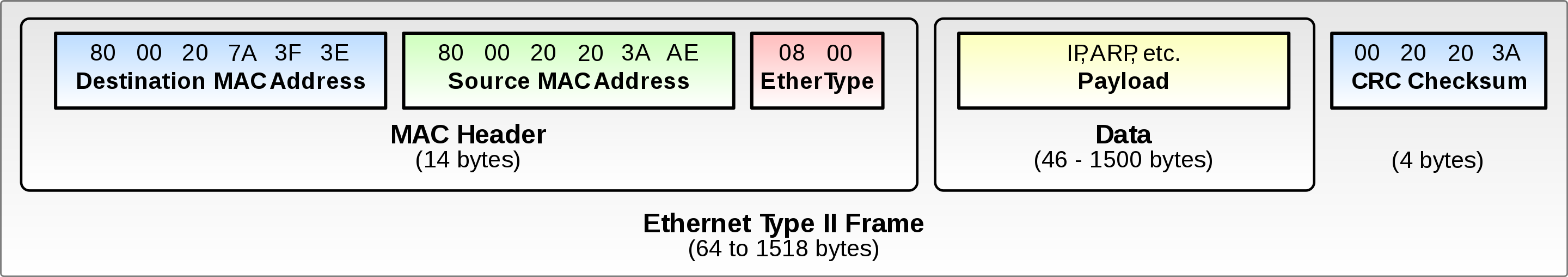
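Manchester encoding, mentioned above, can be illustrated with a small sketch. It assumes the convention usually attributed to IEEE 802.3 (a 1 is a low-to-high transition in the middle of the bit period, a 0 is high-to-low); other conventions flip this. The function names are illustrative only, and signal levels are modeled as the integers 0 and 1 rather than real voltages.

```python
def manchester_encode(bits):
    """Turn each bit into two half-bit signal levels with a mid-bit transition."""
    signal = []
    for bit in bits:
        # Assumed convention: 1 -> low then high, 0 -> high then low.
        signal.extend((0, 1) if bit else (1, 0))
    return signal


def manchester_decode(signal):
    """Recover the bits by reading the direction of each mid-bit transition."""
    if len(signal) % 2:
        raise ValueError("signal must contain whole bit periods")
    bits = []
    for first, second in zip(signal[0::2], signal[1::2]):
        if first == second:
            # No transition means the receiver has lost synchronization.
            raise ValueError("missing mid-bit transition")
        bits.append(1 if (first, second) == (0, 1) else 0)
    return bits


message = [1, 0, 1, 1, 0]
line_levels = manchester_encode(message)
recovered = manchester_decode(line_levels)
```

Because every bit contains a transition, the receiver can recover the sender's clock from the signal itself, at the cost of using two signal intervals per bit.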

Layer 2 - Data Link Layer

Layer 1 allows for a protocol of transmitting and receiving bits. But how do we encapsulate those bits and their electrical pulses and identify each node on the network? This is where framing and address identification come into play. The Data Link Layer establishes protocols for how to ‘frame’ a series of binary data and identify the nodes communicating with each other. Every device is assigned a unique MAC address by its manufacturer. When attempting to communicate with another networked device, the Data Link Layer provides protocols to ensure that:

- Binary data and the electrical pulses can be encapsulated into addressable frames

- Devices add headers to frames that include physical addresses.

- Any sort of collisions (multiple computers connected to one cable) or errors (a frame’s data not fully delivered) are handled through various protocols and retried.

With these protocols in place, the Data Link Layer can direct the traffic within a local network (not yet the internet) and handle collisions and errors from the physical layer.
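As a rough illustration of framing, the sketch below packs a destination address, a source address, a type field, and a payload into a frame, then appends a checksum so the receiver can detect corruption. The layout loosely follows an Ethernet II header, but it is deliberately simplified (no preamble, no minimum payload padding, and the checksum byte order is not wire-accurate). The helper names and MAC addresses are hypothetical.

```python
import struct
import zlib

HEADER_FORMAT = "!6s6sH"  # destination MAC, source MAC, 16-bit type field
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 14 bytes


def mac_to_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def bytes_to_mac(raw):
    """Convert 6 raw bytes back into 'aa:bb:cc:dd:ee:ff'."""
    return ":".join(f"{byte:02x}" for byte in raw)


def build_frame(dst_mac, src_mac, ethertype, payload):
    """Prefix the payload with an addressed header and append a CRC-32."""
    header = struct.pack(HEADER_FORMAT, mac_to_bytes(dst_mac),
                         mac_to_bytes(src_mac), ethertype)
    body = header + payload
    return body + struct.pack("!I", zlib.crc32(body))


def parse_frame(frame):
    """Verify the checksum, then split the frame back into its fields."""
    body, checksum = frame[:-4], frame[-4:]
    if struct.unpack("!I", checksum)[0] != zlib.crc32(body):
        raise ValueError("frame corrupted in transit")
    dst, src, ethertype = struct.unpack(HEADER_FORMAT, body[:HEADER_SIZE])
    return bytes_to_mac(dst), bytes_to_mac(src), ethertype, body[HEADER_SIZE:]


# Hypothetical addresses; 0x0800 is the type value used for IPv4 payloads.
frame = build_frame("ff:ff:ff:ff:ff:ff", "02:00:00:00:00:01", 0x0800, b"hello")
parsed = parse_frame(frame)
```

A receiver that sees a checksum mismatch simply discards the frame, leaving recovery to the retry mechanisms described above or to higher layers.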

Layer 3 - Network Layer

The Network Layer defines the protocols for communication between local networks. Each manufactured computer device has a hardware (MAC) address. However, the internet operates above this level using the Internet Protocol (IP). At this level every participant receives an IP address. This distinction of identification addresses at separate levels adds a layer of encapsulation and also ensures that communication can occur within networks (LANs) at the lower layer (without the internet being required).

Since every participant on the internet requires an address for routing purposes, one of the first protocols in the network layer is the Address Resolution Protocol (ARP). This protocol enables a mapping between a computer's physical (MAC) address and its Internet Protocol (IP) address.

How does a new device receive an IP Address? IP addresses can be static or dynamic, but for personal computers, phones, and most devices, an IP address is assigned dynamically by the ISP providing internet access. IP Addresses must be unique and are managed in a hierarchical and decentralized fashion through the IANA, which delegates to five Regional Internet Registries (RIRs), which assign blocks to ISPs (which in turn provide address assignment to devices on their networks).
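The hierarchical delegation of address blocks can be sketched with Python's standard `ipaddress` module. The block sizes and the use of the private `10.0.0.0/8` range are assumptions chosen purely for illustration; real allocations vary widely in size and are made from public ranges.

```python
import ipaddress

# Illustrative only: pretend this block was delegated to a regional registry.
registry_block = ipaddress.ip_network("10.0.0.0/8")

# The registry carves out a smaller block for one ISP.
isp_block = next(registry_block.subnets(new_prefix=16))

# The ISP carves out a still smaller block for one customer network.
customer_block = next(isp_block.subnets(new_prefix=24))

# A device on that network receives one address from the customer block.
device_address = next(iter(customer_block.hosts()))

print(f"registry: {registry_block} ({registry_block.num_addresses:,} addresses)")
print(f"isp:      {isp_block} ({isp_block.num_addresses:,} addresses)")
print(f"customer: {customer_block} ({customer_block.num_addresses:,} addresses)")
print(f"device:   {device_address}")
```

Because each block is a subset of its parent, uniqueness only has to be enforced locally at each level of the hierarchy.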

So now we have a computer connected to the internet with a physical address and an IP address. And we want to get data from a remote computer (also connected to the internet). How does the request make its way through the network? The request needs to travel through all of the interconnected networks (of cables, routers, and other hardware provided by ISPs discussed earlier). And the ability to ‘find’ another IP address across this vast network is the job of routers. Routers exist at each layer of the ISP hierarchy. Through complex algorithms, Routers will build and populate Route Tables dynamically as new devices join the network. Through this build up, and algorithms for finding shortest paths, a packet of information can traverse all the way from a LAN to a local ISP to the internet backbone, to another ISP, and back down to another LAN on the other side of the globe.

With billions of devices connected to the internet, these routing tables have become massive, and further sub protocols and algorithms continue to be refined and new technology introduced in order to facilitate efficient network passing.
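To make the idea of finding a shortest path concrete, here is a minimal sketch using Dijkstra's algorithm, the approach used by link-state routing protocols such as OSPF inside a single network. Routing between ISPs is also shaped by policy and business agreements, so this is not a model of the whole internet. The topology, node names, and link costs below are invented for the example.

```python
import heapq


def shortest_path(links, source, target):
    """Return (path, total_cost) for the cheapest route, or (None, inf)."""
    distances = {source: 0}
    previous = {}
    queue = [(0, source)]
    visited = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry for a node already finalized
        visited.add(node)
        if node == target:
            break
        for neighbor, link_cost in links.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < distances.get(neighbor, float("inf")):
                distances[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if target not in distances:
        return None, float("inf")
    # Walk backwards from the target to rebuild the path.
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], distances[target]


# Hypothetical topology: link costs are arbitrary units, not real measurements.
links = {
    "home_lan": {"local_isp": 1},
    "local_isp": {"home_lan": 1, "backbone_a": 2, "backbone_b": 5},
    "backbone_a": {"local_isp": 2, "backbone_b": 1, "remote_isp": 6},
    "backbone_b": {"local_isp": 5, "backbone_a": 1, "remote_isp": 2},
    "remote_isp": {"backbone_a": 6, "backbone_b": 2, "remote_lan": 1},
    "remote_lan": {"remote_isp": 1},
}

path, cost = shortest_path(links, "home_lan", "remote_lan")
```

In this toy topology the cheapest route passes through both backbone nodes, even though more direct links exist, because the direct links carry higher costs.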

Layer 4 - Transport Layer

At this point we have an entire communication stack that allows for computer to computer connection over a global network. But how do requests and their associated information make it across the network efficiently? If we were to serve a gigantic file from one computer to another and route it, it would be too large to send as a single unit. The Transport Layer introduces further protocols for breaking up requests and responses into packets. Two well known protocols at this layer are TCP (Transmission Control Protocol) and UDP (User Datagram Protocol).

At a high level, TCP manages the communication and reliability of how packets are created, addressed, and sent across the network layer - and then recombined at the receiving device’s end.

TCP ensures a strict communication hierarchy, with error handling and reordering of packets. So if you are making a bank transaction or sending an email, your computer and the bank or email server will communicate via this protocol to guarantee that packets are all created, routed, and reordered as a part of the transaction.
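The splitting and reordering that TCP performs can be approximated with a short sketch. It numbers each chunk by its byte offset (loosely mirroring TCP's byte-oriented sequence numbers), delivers the chunks out of order with a duplicate, and reassembles them. Real TCP also handles acknowledgements, retransmission timers, and flow control, none of which are modeled here; the function names and the message are made up.

```python
import random


def segment(message, size):
    """Split a message into (sequence_number, chunk) pairs of at most `size` bytes."""
    return [(offset, message[offset:offset + size])
            for offset in range(0, len(message), size)]


def reassemble(segments):
    """Rebuild the message from segments that may be reordered or duplicated."""
    received = {}
    for sequence_number, chunk in segments:
        received.setdefault(sequence_number, chunk)  # ignore duplicates
    data = b""
    for sequence_number in sorted(received):
        if sequence_number != len(data):
            # A gap means a segment was lost and would need retransmission.
            raise ValueError(f"missing data at byte {len(data)}")
        data += received[sequence_number]
    return data


message = b"transfer $100 from checking to savings"
in_flight = segment(message, 8)
random.Random(7).shuffle(in_flight)  # simulate out-of-order arrival
in_flight.append(in_flight[0])       # simulate a duplicated segment
rebuilt = reassemble(in_flight)
```

The receiver ends up with exactly the bytes that were sent, regardless of the order in which the network delivered them.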

UDP has a less strict hierarchy (and less overhead, so it is faster) and offers a ‘best attempt’ at packet delivery. This is more useful in an application like Skype video or a streaming online video game, where an occasional dropped packet or piece of data (as a percent of the total) is not as critical.

Layer 5 - Session Layer

The Session Layer is a thin communication layer that opens and closes connections from the presentation layer to the transport layer. For example, when we visit a website in our browser, our browser's software opens and maintains a session with the remote web server.

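Layer 6 - Presentation Layer

The Presentation Layer translates data between the format an application uses and the format sent over the network. Character encoding, compression, and encryption are commonly placed at this layer.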

Layer 7 - Application Layer

The Application Layer may be most familiar to all of us as we see this end result in our daily use of the internet. Our web browser (like Chrome or Firefox) is an application that uses protocols such as HTTP to fetch and render the contents of a web page. Other application protocols include File Transfer Protocol (FTP) and Simple Mail Transfer Protocol (SMTP). Applications like Gmail or FileZilla are software that wraps these protocols behind a UI in a web or desktop application.

So where are these layers?

These layers and their descriptions just scratch the surface of the complexity involved at each layer. However, the power of these protocols and standards is that each layer offers encapsulation and abstraction up the chain. And with the combination of these layers and their protocols in action, we go all the way from a web request down to the electrical voltage that is transmitted between devices, and all the way back up the other end of the stack.

But where is the code for these layers? Do these protocols live on every computer or device? The short answer is ‘Yes’.

In the 1980’s when these layers and protocols were being established, operating systems continued to be developed, and some *extremely talented* engineers and early internet and computing pioneers programmed these protocols into the operating systems of computers.

For example, both Windows and Linux have implementations of each of these protocols in their respective operating systems.

How an operating system is loaded into computer memory at boot and is then able to call into various code paths and programs can be seen below (assembly code can call into C code).

All of this seems so complex...does every software application need to implement these protocols in its stack?

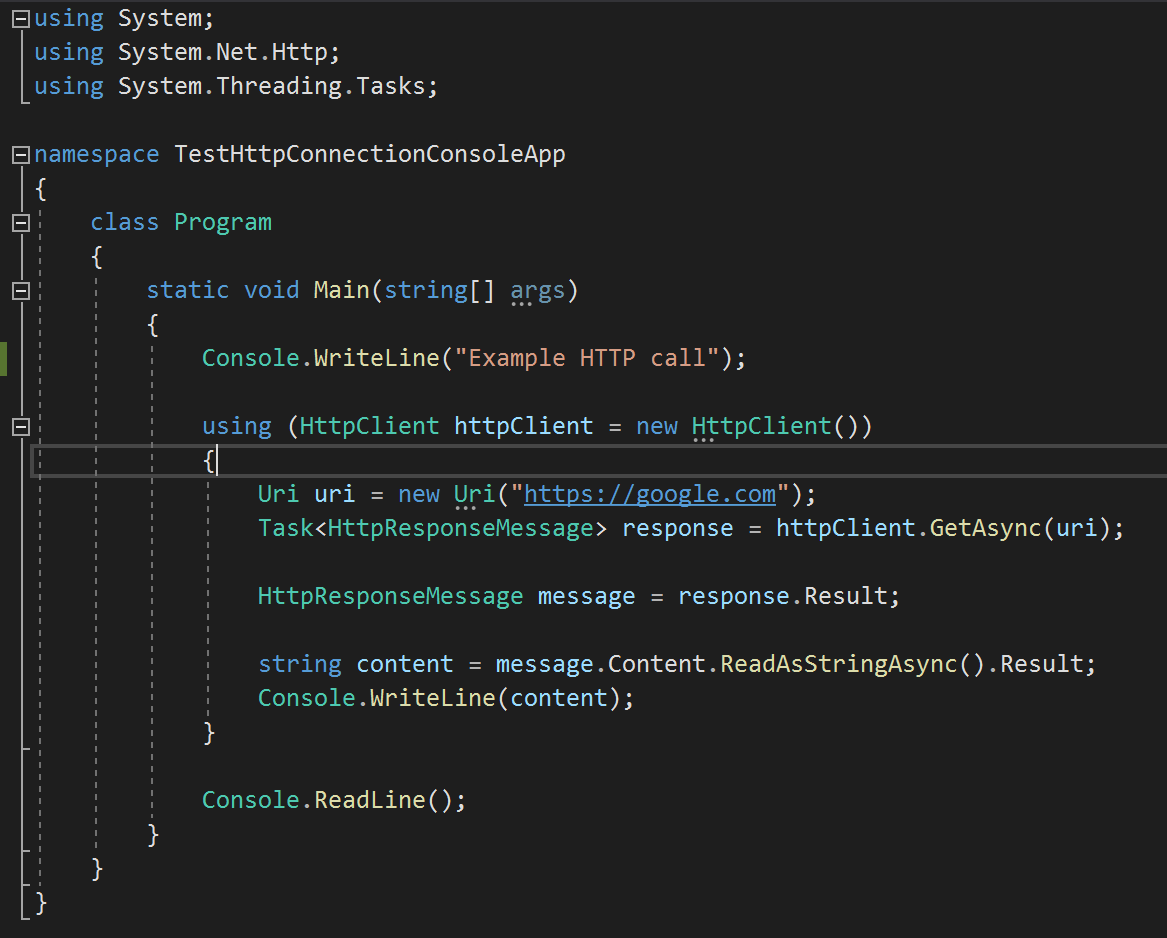

What is absolutely astonishing is the continued abstraction involved in modern computer programming. When writing a web application, nearly all of these layers and their protocols are already provided as libraries. Most modern programming languages offer libraries that completely abstract away all of the details of implementing these protocols so that by the time the code is compiled and executed at run time, all of the details automagically work.

For example, a ‘using System.Net’ line of code in .NET pulls in all underlying libraries so that initiating an HTTP call is just a few lines of code and all of the underlying details of session management, TCP/IP, and the lower layers are hidden from view.
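The same idea in Python looks roughly like the sketch below, which uses only the standard library. The `fetch_text` helper is a hypothetical name introduced for this example; `urllib.request.urlopen` is the standard library call doing the work, and it takes care of name resolution, the TCP connection, and (for https URLs) encryption behind the scenes.

```python
import urllib.request


def fetch_text(url, timeout=5.0):
    """Fetch a URL and return the response body as text."""
    # Everything below the application layer happens inside urlopen.
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch_text("https://example.com")` on a machine with internet access would return the HTML of that page as a string, without the calling code ever touching sockets, packets, or frames.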

Part IV - Domain Name System (DNS)

We have covered a lot of information so far on the history of the internet and how it was formed, the physical infrastructure and sets of cables and other equipment that make up the backbone of the internet, and the various protocols that devices use when talking with each other over the internet.

But what we haven’t yet talked about is how websites work. How is a site like Google or Instagram something we can type into our browsers (or mobile apps) and receive a response from? What are the protocols that tell our computer how to reach this site? Enter the world of DNS.

DNS can be thought of as an address book for the internet that maps a human friendly and memorable name to an IP address. As an example, google.com may resolve to an address such as 142.251.34.14 (cmd > ping google.com); the exact address varies by location and over time.

This process started all the way back in the early days of the internet at DARPA. Elizabeth Feinler and Jon Postel manually maintained a hosts.txt file on a server that mapped the names of computers on the network to their numeric addresses. For computers to join the network, the owners would have to make a phone call to DARPA to ensure their computers were added to this file. Over time, more and more computers joined the network and this manual process could no longer keep up. The decided upon standard for replacement was DNS.

Today DNS is a complex and distributed set of servers and protocols that allow for mapping between human readable names and IP addresses so that we can type in some sort of memorable domain and be routed to the correct place.

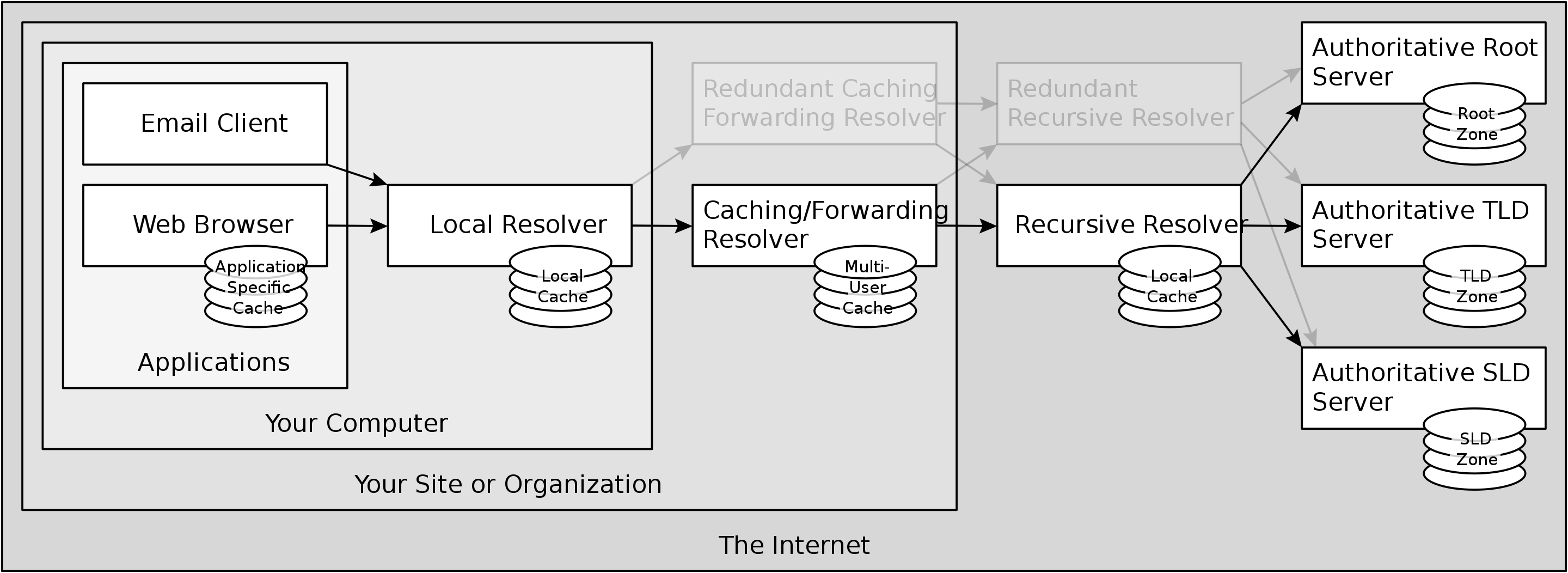

DNS is managed in a tree structure, and the resolution process runs from a local machine cache up through a number of cached layers.

At the top of the tree are the root name servers, which point resolvers to the servers for each top-level domain (such as .com); those in turn point to the authoritative servers, which hold, as their name implies, the final authoritative mappings for a domain. Today there are 13 sets of root name servers (with hundreds of load balanced physical machines) distributed across the world. These servers are operated by 12 organizations and coordinated through a parent organization, IANA. The full list of these servers can be seen here.

It is important to note that DNS relies on the same OSI layers as any other network application. DNS is an application level protocol and as such relies on all of the layers below it. DNS information is therefore exchanged over the network just like any other packet of information, with headers and lower level protocols.
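The tree-structured, cached lookup described above can be sketched as a toy resolver. Every "server" here is just a Python dictionary, and the server names and the address (taken from a range reserved for documentation) are made up; a real resolver exchanges DNS messages over the network and honors per-record expiry times, which this sketch ignores.

```python
# Hypothetical data standing in for the three levels of the DNS tree.
ROOT_ZONE = {"com": "com-tld-server", "org": "org-tld-server"}
TLD_ZONES = {
    "com-tld-server": {"example.com": "example-authoritative"},
    "org-tld-server": {},
}
AUTHORITATIVE = {
    "example-authoritative": {"www.example.com": "192.0.2.10"},
}


class Resolver:
    """Toy resolver that walks root -> TLD -> authoritative and caches answers."""

    def __init__(self):
        self.cache = {}
        self.upstream_queries = 0

    def resolve(self, name):
        if name in self.cache:
            return self.cache[name]  # answered locally, no network traffic
        labels = name.split(".")
        top_level = labels[-1]
        domain = ".".join(labels[-2:])
        self.upstream_queries += 1
        tld_server = ROOT_ZONE[top_level]            # ask a root server
        self.upstream_queries += 1
        auth_server = TLD_ZONES[tld_server][domain]  # ask the TLD server
        self.upstream_queries += 1
        address = AUTHORITATIVE[auth_server][name]   # ask the authoritative server
        self.cache[name] = address
        return address


resolver = Resolver()
first_answer = resolver.resolve("www.example.com")
queries_after_first = resolver.upstream_queries
second_answer = resolver.resolve("www.example.com")
queries_after_second = resolver.upstream_queries
```

The second lookup is served from the cache, which is the reason most name resolutions never reach the root servers at all. An unknown name raises a `KeyError` in this sketch, standing in for a "no such domain" response.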

What happens when I purchase a domain?

Sites like GoDaddy serve as a consumer interface to facilitate the purchase and registration of domain names. GoDaddy (or another registrar) will then take care of all back end operations to record the registration with the registry that runs the relevant top-level domain (such as .com), under policies coordinated through ICANN, so that the name becomes resolvable through the distributed DNS hierarchy.

With this distributed authoritative naming mapping, the internet has a robust and decentralized manner for offering named domains into the routing schemes outlined earlier.

Part V - Protocols, Standards, and Governance

In this article we have only scratched the surface of the hundreds of protocols and their evolution out of the early days of DARPA. This evolution of protocols and the contributions of early internet pioneers, government agencies, corporations, and (likely) millions of people over the years has led to the greatest decentralized and evolved interface protocol ever seen. The internet (and computers) is the base layer for all future innovation in AI, crypto, and web3. Just as important to understand as we look to gauge the future are the governing protocols, principles of decentralization, and the evolution of how people have collaborated together to make it all happen. A few overriding themes of the internet through its evolution that have made it so successful:

1) Open Protocols, Scalable Architecture & Free Entry of Participants

All of the early internet pioneers saw the great potential of the internet, and formulated the design based on completely open protocols (all bullets below are from the history of the internet):

- Each distinct network would have to stand on its own and no internal changes could be required to any such network to connect it to the Internet.

- Communications would be on a best effort basis. If a packet didn’t make it to the final destination, it would shortly be retransmitted from the source.

- Black boxes would be used to connect the networks; these would later be called gateways and routers. There would be no information retained by the gateways about the individual flows of packets passing through them, thereby keeping them simple and avoiding complicated adaptation and recovery from various failure modes.

- There would be no global control at the operations level.

This interview with Vint Cerf (co-inventor, with Bob Kahn, of the TCP and IP protocols) gives some insight into what these early internet pioneers were thinking as they rolled out the internet. This focus on protocols that did not restrict or make solutions closed source has allowed the internet to grow into the ever present digital layer it is today.

2) New Protocol Adoption

Throughout the evolution of the internet, design decisions and new protocols have been required to support continued growth. Technology has changed drastically since the original development of the internet. Personal computing became widespread, cell phones are now ubiquitous, computers have rapidly advanced in memory and storage, and the internet network has grown from 4 nodes to billions.

Ensuring that the original designs and network could accommodate this growth required an open mindset and collaboration across all stakeholders to preserve the original principles. There are several examples of protocols and standards developed jointly by stakeholders as various upper bounds of the internet were reached:

DNS: The overview of DNS is presented earlier in this article. When the early ARPANET ran up against the limitations of a manual process (of mapping computer identifiers with their IP addresses), a new protocol was developed in tandem with input from the entire community. The management of IP Addresses and domains was distributed and decentralized.

TCP & UDP: When limitations of the original TCP were seen, it was reorganized into two protocols (TCP and IP), and UDP was added as an alternative at the transport layer. Again from the history of the internet:

“This model worked fine for file transfer and remote login applications, but some of the early work on advanced network applications, in particular packet voice in the 1970s, made clear that in some cases packet losses should not be corrected by TCP, but should be left to the application to deal with. This led to a reorganization of the original TCP into two protocols, the simple IP which provided only for addressing and forwarding of individual packets, and the separate TCP, which was concerned with service features such as flow control and recovery from lost packets. For those applications that did not want the services of TCP, an alternative called the User Datagram Protocol (UDP) was added in order to provide direct access to the basic service of IP.”

Route Tables: Route tables have grown to contain massive numbers of entries (as more and more nodes join the internet). To help contain and slow this growth, the internet community has developed new protocols to assist with routing algorithms.

IPv4 and IPv6: Original internet addressing had IP addresses with 32 bits (IPv4), which allowed for ~4 billion unique addresses. At the time, no one foresaw that this many devices would be connected to the internet, but hindsight being 20/20, we know that is not enough. Again the internet community came together to introduce a new protocol and standard for 128 bit addresses (IPv6) so that the supply of unique addresses would not run out.
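The difference between 32 bit and 128 bit addressing is easier to appreciate with the arithmetic written out. The sketch below uses Python's standard `ipaddress` module; the two sample addresses come from ranges reserved for documentation and are only there to show what each format looks like.

```python
import ipaddress

# The entire address space of each protocol, expressed as a single network.
ipv4_space = ipaddress.ip_network("0.0.0.0/0")
ipv6_space = ipaddress.ip_network("::/0")

ipv4_total = ipv4_space.num_addresses  # 2 ** 32
ipv6_total = ipv6_space.num_addresses  # 2 ** 128

# Sample addresses from documentation-only ranges.
sample_v4 = ipaddress.ip_address("192.0.2.1")
sample_v6 = ipaddress.ip_address("2001:db8::1")

print(f"IPv4 addresses: {ipv4_total:,}")
print(f"IPv6 addresses: {ipv6_total:.3e}")
print(f"IPv6 addresses per IPv4 address: {ipv6_total // ipv4_total:,}")
print(f"{sample_v4} is IPv{sample_v4.version}, {sample_v6} is IPv{sample_v6.version}")
```

Each additional bit doubles the address space, so moving from 32 to 128 bits multiplies the number of available addresses by 2 to the power of 96.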

3) Free Participation on Standards and Protocols (Request for Comment)

“In 1969 a key step was taken by S. Crocker (then at UCLA) in establishing the Request for Comments (or RFC) series of notes. These memos were intended to be an informal fast distribution way to share ideas with other network researchers. At first the RFCs were printed on paper and distributed via snail mail. As the File Transfer Protocol (FTP) came into use, the RFCs were prepared as online files and accessed via FTP. Now, of course, the RFCs are easily accessed via the World Wide Web at dozens of sites around the world.”

We have seen a lot of change in how people work with the onset of WFH through the pandemic. 50 years ago, however, before the internet as we know it existed, its pioneers created an effective and quick feedback loop for developing and distributing their ideas. And once the internet was developed, it became the backbone for this messaging platform.

An example of an early RFC for DNS can be seen here. Today the RFC standard lives on, alongside a complete suite of tools such as email, Slack, Discord, Twitter, Reddit, and Stack Overflow, and the information transmission lifecycle is faster than ever.

4) Government & Private Enterprise Collaboration

One key to making the internet work was that it was not proprietary to any government or individual. From the fantastic interview provided by Wired, Vint Cerf explains his early motives for the internet design:

Wired: So how did the internet get beyond the technical and academic community?

Cerf: It's just that they (Xerox PARC) decided to treat their protocol as proprietary, and Bob and I were desperate to have a non-proprietary protocol for the military to use. We said we're not going to patent it, we're not going to control it. We're going to release it to the world as soon as it's available, which we did.

So by 1988, I'm seeing this commercial phenomenon beginning to show up. Hardware makers are selling routers to universities so they can build up their campus networks. So I remember thinking, "Well, how are we going to get this in the hands of the general public?" There were no public internet services at that point.

... So I thought, "Well, you know, we're never going to get commercial networking until we have the business community seeing that commercial networking is actually a business possibility."

The collaborative efforts across government, academics, and private enterprise continued with each protocol. (From the history of the internet):

“In 1985, recognizing this lack of information availability and appropriate training, Dan Lynch in cooperation with the IAB arranged to hold a three day workshop for ALL vendors to come learn about how TCP/IP worked and what it still could not do well. The speakers came mostly from the DARPA research community who had both developed these protocols and used them in day-to-day work. About 250 vendor personnel came to listen to 50 inventors and experimenters. The results were surprises on both sides: the vendors were amazed to find that the inventors were so open about the way things worked (and what still did not work) and the inventors were pleased to listen to new problems they had not considered, but were being discovered by the vendors in the field. Thus a two-way discussion was formed that has lasted for over a decade.”

These partnerships continue today across all domains of computer science, through open source software. And this collaboration among government and business is crucial to the continued success of the internet and new layers being added on top of it.

5) Decentralized Governance

Just how decentralized is the internet? We have gone into details on many of the layers, protocols, and hardware that make up the internet. In order for the functionality and protocols to work there are rules that must be followed by all participants, and some centralization is needed for enforcement of rules, standards, and operation of various protocols. These protocols and the governing central entities are outlined below:

MAC Address Assignment: every manufactured device has a unique hardware address that is used when connecting on local networks. This MAC address is assigned by manufacturers, and The Institute of Electrical and Electronics Engineers (IEEE) oversees this number assignment protocol. Virtualization technologies exist on top of this assignment for further anonymization.
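As a rough illustration of how this assignment is structured: a MAC address is 48 bits, the first three bytes are the prefix the IEEE assigns to a manufacturer, and one bit of the first byte marks addresses that were set locally (for example by virtualization or randomization software) rather than by the manufacturer. The helper name `split_mac` and the sample addresses below are made up for this sketch.

```python
def split_mac(mac: str) -> dict:
    """Split a MAC address into its manufacturer prefix and device part."""
    octets = [int(part, 16) for part in mac.replace("-", ":").split(":")]
    if len(octets) != 6 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return {
        # First three bytes: prefix assigned to the manufacturer by the IEEE.
        "oui": ":".join(f"{o:02X}" for o in octets[:3]),
        # Last three bytes: chosen by the manufacturer for each device.
        "device": ":".join(f"{o:02X}" for o in octets[3:]),
        # Bit 0x02 of the first byte flags a locally administered address.
        "locally_administered": bool(octets[0] & 0x02),
    }


# Sample addresses invented for illustration only.
print(split_mac("00:1A:2B:3C:4D:5E"))
print(split_mac("02:00:00:00:00:01"))
```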

Autonomous System Numbers (ASN) & Internet Protocol (IP) Address Allocation: The Internet Assigned Numbers Authority (IANA) is an organization that oversees a variety of internet functions. IANA was formed out of ARPANET early in its conception before eventually growing into its own independent organization.

Autonomous System Numbers (ASNs) are allocated by IANA to Regional Internet Registries (RIR) who in turn manage the assignment of ASNs to Internet Service Providers. There are five regional internet registries who manage the internet functions (largely by continent).

ISPs are allocated blocks of IP addresses from the RIRs. ISPs then assign IP addresses dynamically to devices connecting to their network. You will notice that your individual device’s IP address changes as you connect to different networks (LANs) on an ISP.
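A block of IP addresses can be sketched with Python's standard `ipaddress` module. The block below, 203.0.113.0/24, is a range reserved for documentation, and the split into four pools is an assumption made for illustration, not how any particular ISP operates.

```python
import ipaddress

# Hypothetical block handed to an ISP (a documentation-only range).
isp_block = ipaddress.ip_network("203.0.113.0/24")

# The ISP might carve the block into smaller pools, here four /26 networks.
pools = list(isp_block.subnets(new_prefix=26))

# A device is handed one address out of one of those pools.
device_address = ipaddress.ip_address("203.0.113.77")
owning_pool = next(pool for pool in pools if device_address in pool)

print(isp_block.num_addresses)        # size of the whole block
print([str(pool) for pool in pools])  # the four smaller pools
print(owning_pool)                    # pool containing the device address
```

When your device joins a different network, it is handed an address from a different pool, which is why the address changes.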

Domain Name System (DNS) and Authoritative Name Servers: as discussed, DNS is a decentralized system with 12 different entities managing 13 different server groups that provide IP address lookup, resolution, and caching policies.

It is interesting to note that IANA is a function within the Internet Corporation for Assigned Names and Numbers (ICANN). ARPANET was created within the US defense and government establishment, out of a desire to harden communication networks. As it evolved, new organizations and nonprofits were created to distribute control of various protocols (such as ASNs, IP addresses, and DNS name servers). Even so, these organizations all sit under the umbrella of ICANN, which is a United States not-for-profit entity.

How anonymous is the internet? How trackable is data?

In this article we did not touch on many important topics such as encryption, HTTPS, VPNs, and other methods for secure communication over the internet. At a high level, your ISP can see your internet traffic (which sites you visit) when you are on their network. However, they are not able to see sensitive data sent over HTTPS (i.e. they cannot see the passwords you enter in a browser for your online banking application). All of these details will be covered in a future article. Still, the possibility that an ISP can throttle certain traffic is real, and the concept of net neutrality has come into question over the past several years.

In addition, the notion of ISPs as local monopolies can be valid, as in many areas there is only one ISP option. New technologies from Starlink and other companies may add competition in markets that are largely uncompetitive today.

So is the internet decentralized? Yes and No. There are a dozen or so Tier 1 ISPs and agencies who own much of the infrastructure. And several distributed organizations manage the centralized addressing protocols necessary for everyone to contribute. So there is some notion of centralization. However, as we have seen since the earliest days of ARPANET, the internet and its pioneers and continued inventors have introduced hundreds of protocols and governance strategies to ensure that the original fundamentals and design principles remain intact and decentralized to the maximum degree possible.

Part VI - Macro Internet Trends

Armed with an understanding of the history of the internet and basic information about the layers and protocols that compose it, we can look at the exponential growth of the internet through three lenses:

- Exponential growth in the underlying physical internet: How fast is the internet? How far does it reach? How efficient is each connected device?

- Exponential growth in network effects: How many users are connected to the internet? How many websites are there?

- Exponential growth in the internet's effect on society: How much time do we spend on the internet? How has it reshaped all aspects of our society?

1) Exponential Growth in the Physical Layer - Number of Connected Devices on the Internet

In 1973, we saw 4 connected devices make up the original ARPANET. Less than 50 years later, we see more than 22 billion connected devices, with an anticipated 50 billion devices in 2030.

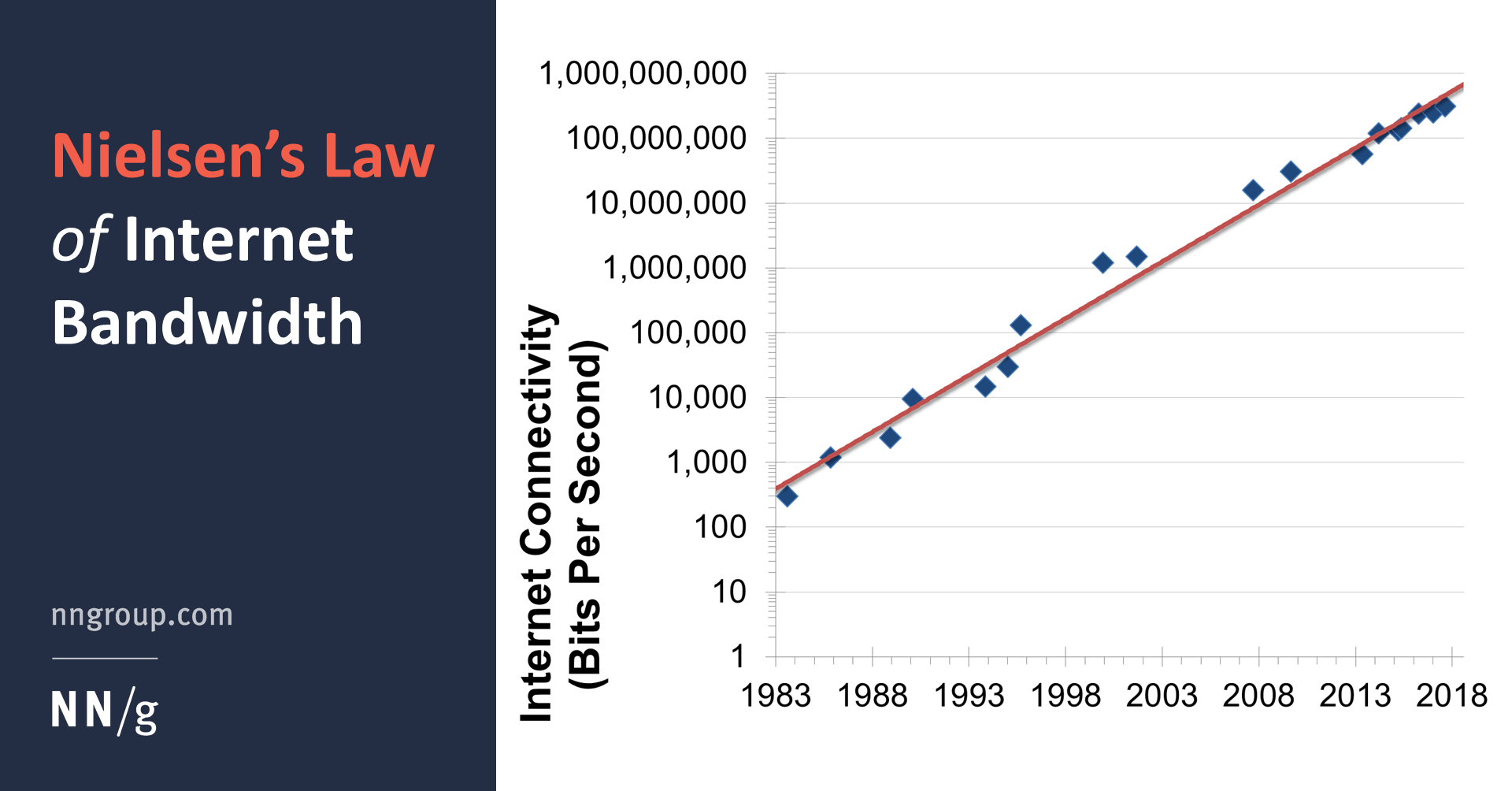

2) Exponential Growth in the Physical Layer - Transmission Bandwidth

The technology that underpins the backbone cables of the internet has improved exponentially in terms of data throughput (how many bits per second can be transmitted).

The early Ethernet cables of 1983 could transfer ~3 Megabits per second. Today, Ethernet cables can transfer data at speeds of ~400 Gigabits per second. This is an improvement of more than 100,000x in less than 40 years. For reference, 1 gigabyte (8 gigabits) is roughly equivalent to the amount of data in your favorite half-hour HD TV show from Netflix.
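The comparison is easy to check with a few lines of arithmetic. This is a minimal sketch using only the ~3 Megabit and ~400 Gigabit figures quoted above; it also converts bits to bytes, since link speeds are quoted in bits while file sizes are usually quoted in bytes.

```python
MEGABIT = 10 ** 6      # bits
GIGABIT = 10 ** 9      # bits
BITS_PER_BYTE = 8

early_bps = 3 * MEGABIT       # ~3 Megabits per second
modern_bps = 400 * GIGABIT    # ~400 Gigabits per second

# How many times faster the modern link is.
improvement = modern_bps / early_bps

# Modern throughput expressed in gigabytes per second.
gigabytes_per_second = modern_bps / BITS_PER_BYTE / 10 ** 9

# Time to move a 1 gigabyte file over each link.
one_gigabyte_in_bits = BITS_PER_BYTE * 10 ** 9
seconds_early = one_gigabyte_in_bits / early_bps
seconds_modern = one_gigabyte_in_bits / modern_bps

print(f"improvement: {improvement:,.0f}x")
print(f"modern link: {gigabytes_per_second} GB/s")
print(f"1 GB transfer: {seconds_early / 60:.0f} min then, {seconds_modern * 1000:.0f} ms now")
```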

High speed fiber optics and other technologies continue to develop, and some connection technology has bandwidth in the hundreds of terabytes per second.

3) Exponential Growth in the Physical Layer - Cabling (miles of cable)

The internet is a backbone of interconnected cables to transmit data. In addition to efficiency of each cable and its medium for data transmission (bandwidth), the physical footprint of the internet has grown exponentially.

What started as a direct line from California to Massachusetts (~3,000 miles) is now more than 745,000 miles of underwater cabling alone, connecting the continents.

4) Exponential Growth in the Physical Layer - Connected Device Technology Improvement

Every computer connected to the internet has improved exponentially in terms of compute speed, processing power, and memory. Over that same time, costs have decreased exponentially for all parts. The previous article here goes into all the details.

5) Exponential Growth in the Physical Layer - Consumer Bandwidth (via ISP)

With all of the backbone technology improvements, device enhancement, and physical infrastructure growth, we as consumers see exponentially faster bandwidth in our everyday use. Nielsen's law goes back to 1983 and shows a 50% improvement per year in bandwidth for high-speed connections.
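A 50% improvement per year compounds quickly. The sketch below assumes a constant 50% annual rate, as the law states; the function name `growth_factor` is just for illustration.

```python
import math

ANNUAL_GROWTH = 0.50  # 50% improvement per year


def growth_factor(years: float, rate: float = ANNUAL_GROWTH) -> float:
    """Total multiplier after compounding `rate` for `years` years."""
    return (1 + rate) ** years


# Years needed for bandwidth to double at this rate.
doubling_time_years = math.log(2) / math.log(1 + ANNUAL_GROWTH)

print(f"after 10 years: {growth_factor(10):.1f}x")
print(f"after 20 years: {growth_factor(20):,.0f}x")
print(f"doubling time: {doubling_time_years:.2f} years")
```

At this rate, bandwidth doubles in well under two years and grows by more than 50x in a decade.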

6) Exponential growth in network effects - Number of People with Access to Internet

At the end of 2016, nearly 3.5 billion people had access to the internet. In 2001, at the height of the 'tech bubble', only half a billion people had access. In those 15 years, we have seen roughly 7x growth in the number of connected users, and now more than half the planet has access to the internet.

7) Exponential growth in network effects - Number of Websites

Today there are more than 1.5 billion websites on the internet. This figure has grown exponentially from the ~17 million websites just 20 years ago (in 2000).
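To compare growth figures quoted over different time spans, it helps to convert each to an average annual growth rate. This is a minimal sketch using the user and website counts quoted above; the function name `annual_growth_rate` is an assumption for illustration, and the result is a smoothed average, not a claim that growth was the same every year.

```python
def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Average compound growth per year that takes `start` to `end`."""
    return (end / start) ** (1 / years) - 1


# Internet users: ~0.5 billion (2001) to ~3.5 billion (2016).
users_rate = annual_growth_rate(0.5e9, 3.5e9, 2016 - 2001)

# Websites: ~17 million (2000) to ~1.5 billion, 20 years later.
websites_rate = annual_growth_rate(17e6, 1.5e9, 20)

print(f"users:    {users_rate:.1%} per year")
print(f"websites: {websites_rate:.1%} per year")
```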

8) Exponential growth in the internet's effect on society - Time on Digital Media

It is no secret that the internet has fundamentally reshaped how we spend our lives. And this graph from World Of Data shows that we spend an average of more than 6 hours a day interacting on the internet.

9) Exponential growth in the internet's effect on society - Ecommerce Share of Retail Sales

And with more people accessing the internet and spending a greater share of time online, ecommerce makes up a growing share of retail sales.

10) Exponential growth in the internet's effect on society - Social Media Usage

One last statistic to round out what are almost obvious trends at this point - just how much people use social media. The graph below is effectively the same as the growth in the number of people using the internet. In many ways the internet is synonymous today with communication.

We know that the internet has reshaped all aspects of society. Hopefully with this high level insight into some additional details of how the internet works we can:

- Appreciate the ingenuity of all of the internet pioneers who set the standards for the internet

- Recognize that embracing this type of technology across private enterprise and government can be beneficial for everyone

- Recognize the enormous complexity of the system and keep that perspective as we look forward to next generation trends such as crypto and web3 and what seems to be 'too complex'

- Understand that the internet is a powerful base layer of modern society upon which much is built in our modern economy

Be sure to subscribe to the Exponential Layers newsletter and YouTube channel to get all updates on new videos and articles.
