Cloud Computing - Solving a Trillion Dollar Problem

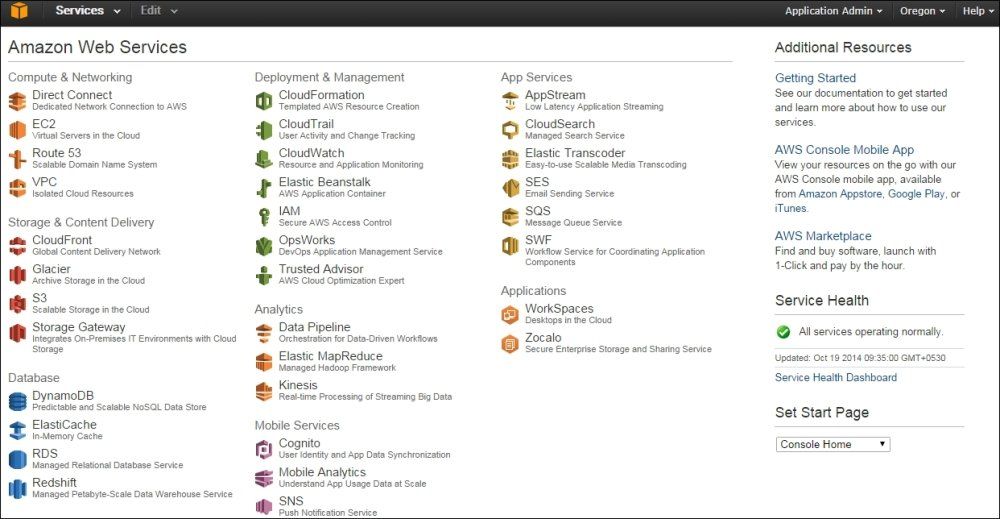

“I’m in love with this slide. We update it, but it doesn’t matter.” The numbers on this slide, presented by Amazon Web Services (AWS) VP and Distinguished Engineer James Hamilton at the 2016 AWS re:Invent conference, are astonishing.

In 2015, AWS added enough server capacity every single day to support a Fortune 500 enterprise.

In 2015, AWS operated data centers in 14 global regions. As of 2021, AWS operates in 26 regions with 84 Availability Zones. As Mr. Hamilton alludes to in his presentation, it is mind-boggling to think about all of the execution and complexity involved in making this a reality:

- Component, semiconductor, and hardware manufacturers must work together to create servers

- Data centers are constructed worldwide - concrete is poured, redundant power supplies are set up, and fiber-optic cables are laid to connect each data center to the provider's backbone network

- Trucks back up daily to deliver new servers to the various data center facilities

- Specialized technicians rack servers and connect hardware and networking equipment into the data center

- Software engineers write and deploy code in the data center to abstract away the underlying details and make services available to customers

- Product and engineering teams build some of the world's most sophisticated algorithms and software service stacks on top, and package the details into customizable templates for the largest enterprises in the world to use

Making all of this a reality and executing at this level every day is an incredible feat, and it is no wonder that cloud computing is now set to become a trillion-dollar revenue market within just five years.

At the latest re:Invent conference just a few weeks ago (2021), Amazon CTO Dr. Werner Vogels highlighted a few of AWS's groundbreaking innovations, along with some numbers that show the astronomical scale at which AWS operates.

AWS's Identity and Access Management (IAM) service, which handles calls from every customer to every service across every region, routinely processes 500 million API calls every second.

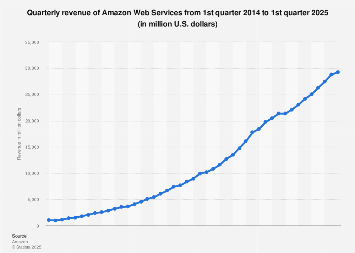

The numbers at every level are simply staggering. The top three public cloud providers - Amazon’s AWS, Microsoft’s Azure, and Google’s Google Cloud Platform (GCP) - control some 60% of the market. AWS's 2018 annual revenue was $25 billion; this year (2021), AWS is on track to reach ~$61 billion. In its most recent fiscal year, which ended this summer, Microsoft reported $60 billion in revenue from its Intelligent Cloud segment (which includes Azure). And Google, a late entrant to the cloud computing market, has already grown revenue in its cloud segment from $5.8 billion in 2018 to what could be nearly $20 billion at the end of this fiscal year. (All figures are from the companies' 10-Q and 10-K filings.)

What’s just as impressive is that none of these three businesses shows any sign of slowing down. In fact, the opposite is true: each of the major cloud providers keeps innovating and expanding into new categories, often overlapping with one another. AWS and Goldman Sachs announced a new vertical-specific cloud for financial services built on top of AWS. Microsoft Azure continues to pioneer new layers of the cloud with edge computing, 5G networks, and underwater and space-based data centers. Google Cloud Platform is offering unprecedented access to machine learning services at cloud scale.

And with every company moving to take advantage of the cloud, the dependency graphs are massive. Any time there is an outage at AWS, a whole host of internet services fail in some fashion: smart cars cannot unlock, email and chat apps are unavailable, or customers are locked out of online banking applications. Each of these examples highlights just how fundamental cloud computing has become to society.

At this point, cloud computing is an assumed, exponential layer of our society, and it impacts a growing portion of our world every day. Because it is already so dominant, in this article we will break down cloud computing at the 101 level to understand:

- Part I - What is Cloud Computing?

- Part II - What are the problems that Cloud Computing solves that make it a fundamental layer of society with such a massive investment opportunity?

Part I - What is Cloud Computing?

Every day, we all contribute to or use the cloud in some manner. Any time we send an email, modify a Google spreadsheet, scroll social media, stream Spotify, or watch a show on Netflix, we rely on other computers to process and return whatever information we requested. Companies today have massive workloads that rely on vast computing infrastructure: running weekly financial reports, calculating delivery or flight plans, or programming a set of robots on a factory floor. But where do all of the computers that perform this work live? Enter the world of on-premise data centers and cloud computing.

If only Mark Zuckerberg could have deployed the original Facebook code to AWS. The early days of the internet required high upfront capital costs in the form of buying, provisioning, and maintaining physical infrastructure. Setting up servers and infrastructure was time-consuming and expensive, and it consumed the majority of the resources on every new project.

Andy Jassy and his team, seeing this pattern repeat across multiple IT projects at Amazon, decided to put storage, compute, and networking behind a collection of APIs, forming the first cloud computing services. In 2006, AWS launched its Simple Storage Service (S3) and Elastic Compute Cloud (EC2), and the rest is history.

What are storage, compute, and networking?

We humans are incredibly resourceful. In the early days of our history we grunted and made hand gestures to convey information and meaning to each other. Eventually we began to draw symbols and art to convey ideas, and at some point we associated sounds with symbols and formed modern language. Today, every single word, image, and sound can be distilled down to 1s and 0s in binary. Computers are able to encode and decode all of this and transmit it over networks (the internet) at near light speed.

Every time we use a computer, cell phone, or any other 'smart' device, it issues a request to a server connected somewhere on the internet. Through a massively complex set of routing, protocols, and physical cable infrastructure, that server is able to interpret the request (e.g., a Google search or a request to play a Netflix show) and return the information to our device.

Even your laptop could be considered a server, which at its core is just a computer designed to accept and process requests and then return information. To put these concepts in context, take a personal blog: if you wanted to make your blog available to the rest of the world over the internet, you would have a few options:

(Option 1) Connect your laptop to the internet - it is certainly feasible to connect your laptop to the internet and set it up to run 24 hours a day, responding to any requests that come to your self-hosted website. The issues quickly become clear. (Issue 1) Your laptop has to run 24x7; if your power goes out or your dog trips over the power cable, your site is no longer available. (Issue 2) Your blog becomes a great success and thousands of people start visiting your site; your laptop simply won't be able to handle all of this new traffic. Now imagine your website is a global enterprise with thousands of applications and websites... we're going to need a few more laptops.
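To make Option 1 concrete, here is a minimal sketch of a laptop acting as a server, using only Python's standard-library `http.server` module. The page contents, the `render` helper, and port 8000 are hypothetical choices for illustration, not a recommended way to host a real site.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical blog content: maps URL paths to page bodies.
PAGES = {
    "/": "Welcome to my blog!",
    "/about": "I write about cloud computing.",
}


def render(path: str) -> tuple[int, str]:
    """Return (HTTP status code, body) for a requested path."""
    if path in PAGES:
        return 200, PAGES[path]
    return 404, "Not found"


class BlogHandler(BaseHTTPRequestHandler):
    """Accepts a GET request, processes it, and returns information."""

    def do_GET(self) -> None:
        status, body = render(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Block and answer requests until the process stops (or the dog unplugs the laptop)."""
    HTTPServer(("0.0.0.0", port), BlogHandler).serve_forever()
```

Calling `serve()` and visiting `http://localhost:8000/` returns the home page. Both issues show up right away: the site exists only while this one process on this one laptop is running, and `HTTPServer` handles requests one at a time.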

(Option 2) Set Up a Data Center - a laptop isn't going to cut it for hosting your websites, applications, or compute workloads, so you invest in building your own data center(s). You set up a warehouse-like physical structure with all of the power, servers, and network cabling needed for a reliable infrastructure that is fully equipped to handle traffic, respond to requests, and manage all of your compute workloads. This is no small undertaking. The upfront costs can be extreme, and a lot of planning and specialized technical labor is required to bring a data center online.

(Option 3) Use the Cloud - with the advent of cloud computing, someone else manages Option 2 entirely. You ask Amazon, Microsoft, or Google for access to their massive data centers, and they let you rent their servers on an ongoing, on-demand basis. They employ thousands of the world's smartest technicians, hardware engineers, and software engineers to design world-class facilities, and they offer out-of-the-box software and algorithms that plug and play into your websites or applications.

At this point you can probably see the advantages of using cloud computing over your own data centers. But the option to use the cloud did not exist until about 15 years ago, and the early days of AWS offered a much more limited set of functionality than may have been available in your own data center. Computers have been around since the 1940s, so enterprises spent more than 50 years with no option except building data centers before the cloud came along.

Today, Cloud Computing offers incredibly rich functionality and world class features, and solves a host of problems. Let's dive into these problems below.

Part II - What are the problems that Cloud Computing solves?

Problem #1 - Capital Costs and Upfront Provisioning Time

Any new IT project today requires some combination of compute, storage, and networking. As we outlined above, there are really only two ways to accomplish this:

Option number one is to do capacity planning, estimate all of the hardware and infrastructure that is needed, order all of the components, set up the infrastructure (which requires complex technical work, aka $$$), and network everything into the existing data center. And this is just to start the project. From there, the product managers and software engineers work together to create the new application. The engineers need to write (or, if they are lucky, reuse existing) code that connects the new application to whatever storage, compute, and networking infrastructure was provisioned. They need to write or set up new testing infrastructure and a deployment suite. Only then can they write the application-specific code and ship the project.

This is a costly process that requires expensive resources. A lot of redundant code needs to be written, and an immense amount of planning goes into upfront procurement, which also requires large capital investments long before any value is delivered (something that finance will surely appreciate). For startups that may not have a data center at all, the options are even more limited.
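The capacity-planning step in option number one boils down to an estimate like the one below. This is a back-of-the-envelope sketch; the function name, the per-server throughput figure, and the 25% headroom default are all assumptions, and real plans also cover storage, networking, and hardware lead times.

```python
import math


def servers_needed(peak_requests_per_sec: float,
                   requests_per_server: float,
                   headroom: float = 0.25) -> int:
    """Estimate how many servers to order for a projected peak load.

    headroom is spare capacity (0.25 = 25%) reserved for growth and for
    servers that fail; both inputs are planning guesses, not measurements.
    """
    if requests_per_server <= 0:
        raise ValueError("requests_per_server must be positive")
    # Round up: a fractional server still has to be bought as a whole one.
    return math.ceil(peak_requests_per_sec * (1 + headroom) / requests_per_server)


# Hypothetical inputs: 10,000 requests/sec at peak, 500 requests/sec per server.
print(servers_needed(10_000, 500))  # 25
```

The catch is that the answer is locked in before anyone knows whether the forecast was right: guess too low and the application falls over, guess too high and the capital sits idle.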

Option number two offers an on-demand model. AWS, Azure, and GCP (or another cloud provider) already have suites of services that can be used from day one. While some level of capacity planning and ‘plumbing and infrastructure’ coding is still required, the software engineers can start writing application code on day one. This leads to faster time to value for every project and reduces the upfront cost of procuring infrastructure and hardware.

Problem #2 - Ongoing Maintenance

Continuing with the example above, in Option 1 we still need to maintain all of the infrastructure we procured, along with the facilities that house the hardware. Data centers are not a hands-off experience. We will need to repair servers or network cables as they become faulty, we’ll need air conditioning and cooling, and we’ll need some form of physical security because the data we are storing is sensitive and confidential.

Cloud computing again largely removes the need for this ongoing maintenance. AWS, Azure, and GCP employ world-class engineers who focus specifically on problems such as cooling the data centers. Each data center is protected by multiple layers of physical access controls and a gated perimeter with security guards. And all of this is abstracted away behind YAML files and console clicks.

IDC estimates *just the provisioning and maintenance savings* at 31% over a five-year period.

Problem #3 - Scaling

But will it scale?

The new application we shipped has become a massive success, and it is clear we will need to add servers and capacity to handle the additional user load. With an on-premise data center, we are back to the drawing board: planning and estimating for more capacity, waiting for the new servers to be delivered, waiting for our specialized technicians to install the hardware, and making sure the software engineers can scale the applications to the new clusters. Hopefully our existing data center has room for all of the new hardware, because construction could take a while. Even in the best-case scenario, we miss out on capturing market share and new customers.

Cloud computing, on the other hand, offers on-demand compute and configurable auto-scaling. We set up the rules for how we want our application to scale; for example, if servers are getting bogged down processing requests or doing other work, our cloud provider automatically adds servers to handle the additional load.

The reverse holds true as well. Perhaps our business has seasonal fluctuations (e.g., e-commerce during the holidays) and we expect a surge of traffic for a given hour, day, week, or month. Our cloud provider can scale up the resources we need and then scale back down to a normal workload after the surge, saving resource costs. This ability to scale up and down to meet demand is one of the key drivers behind a 62% increase in IT infrastructure management efficiency.
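The scaling rules described above can be sketched as a small function that decides how many servers to run. It is loosely modeled on the idea behind target-tracking policies (keep average CPU near a target), but it is a simplified illustration with hypothetical names and defaults, not any provider's actual algorithm; real auto-scalers also apply cooldown periods and health checks.

```python
import math


def desired_capacity(current_servers: int,
                     avg_cpu_percent: float,
                     target_cpu_percent: float = 50.0,
                     min_servers: int = 2,
                     max_servers: int = 20) -> int:
    """Return how many servers to run so average CPU moves toward the target.

    If 4 servers average 90% CPU and the target is 50%, the same total work
    spread across ceil(4 * 90 / 50) = 8 servers would average about 45%.
    """
    needed = math.ceil(current_servers * avg_cpu_percent / target_cpu_percent)
    # Never drop below the floor (availability) or exceed the ceiling (budget).
    return max(min_servers, min(max_servers, needed))


print(desired_capacity(4, 90.0))  # 8: holiday surge, scale up
print(desired_capacity(8, 20.0))  # 4: surge is over, scale back down
```

The same rule handles both directions, which is exactly what saves money after the surge: servers that are no longer needed stop being paid for.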

As we saw, Amazon built AWS to solve many of its own problems, and its Prime Day data is an incredible example of dogfooding its own auto-scaling. James Hamilton again clearly outlines the massive cost savings that Amazon can reap: Amazon (or any company) no longer needs to overprovision hardware just to meet peak load expectations.
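To see why overprovisioning for peak load is so expensive, compare the server-hours used by a fixed fleet sized for the peak with a fleet that tracks demand hour by hour. The demand profile and per-server capacity below are hypothetical numbers chosen for illustration, not Amazon's Prime Day data.

```python
import math


def server_hours(hourly_demand: list[float], capacity_per_server: float) -> tuple[int, int]:
    """Return (fixed, elastic) server-hours for a demand series.

    fixed:   a fleet sized for the busiest hour, running every hour.
    elastic: a fleet resized each hour to just cover that hour's demand.
    """
    if not hourly_demand:
        return 0, 0
    per_hour = [math.ceil(d / capacity_per_server) for d in hourly_demand]
    fixed = max(per_hour) * len(per_hour)
    elastic = sum(per_hour)
    return fixed, elastic


# Hypothetical 8-hour sale: traffic spikes to 10x normal for one hour.
demand = [100, 100, 100, 400, 1000, 400, 100, 100]  # requests/sec
print(server_hours(demand, capacity_per_server=100))  # (80, 23)
```

In this toy scenario the fixed fleet pays for 80 server-hours while the elastic one uses 23, roughly 71% fewer, and the gap grows the spikier the traffic is.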

Problem #4 - Failover, Redundancy, and Uptime

For large enterprises and mission-critical applications, uptime is an absolute requirement. If a medical device company’s data center goes offline for even a minute, that could be the difference between life and death for a patient relying on its services. The amount of activity on the internet every minute is staggering: every minute, Amazon makes more than $250k in sales and Netflix streams over 452 thousand hours of content, so even one minute of downtime is enormously costly.
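The cost of downtime can be made concrete with a little arithmetic: an availability percentage translates directly into minutes of downtime per year, and multiplying by a per-minute revenue figure gives a rough cost. This sketch reuses the $250k-per-minute Amazon sales figure above purely as an illustrative input; it assumes a 365-day year and that every minute of downtime is equally costly, which real traffic patterns do not guarantee.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability: float) -> float:
    """Minutes per year a service may be down at a given availability (0.999 = 99.9%)."""
    return (1 - availability) * MINUTES_PER_YEAR


def downtime_cost(availability: float, revenue_per_minute: float = 250_000) -> float:
    """Rough yearly revenue at risk, assuming every minute is worth the same."""
    return downtime_minutes_per_year(availability) * revenue_per_minute


for availability in (0.99, 0.999, 0.9999):
    minutes = downtime_minutes_per_year(availability)
    print(f"{availability:.2%}: {minutes:,.1f} min/year, ~${downtime_cost(availability):,.0f} at risk")
```

At 99.9% availability that is about 525.6 minutes (roughly 8.8 hours) of downtime per year, which at $250k per minute is on the order of $131 million.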

In its 2018 report, IDC estimates that using the cloud instead of on-premise data centers lets organizations preserve productive time and revenue worth $32,316 per 100 users.

So it goes without saying that redundancies must be put in place. Neither on-premise nor cloud data centers are immune to natural disasters, power outages, or even user error. Depending on how valuable the application or workload is, it is imperative to architect it to be able to ‘failover’ to another data center.

The mechanisms for doing this can be quite complex, but effectively we start again at the first step of infrastructure provisioning. For a non-cloud application, we are back to capacity planning, ordering hardware and servers, and configuring them. This time, however, the new data center is in an unfamiliar, remote location away from the current data center. And unless we can use the new data center for other, non-critical workloads, we effectively have an idle data center until an emergency occurs.
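At its simplest, failover means trying a primary location first and falling back to a standby when it fails. The sketch below shows that idea on the client side, with hypothetical region names and handler functions standing in for real network calls; production systems usually also rely on health checks and DNS- or load-balancer-level routing, which are not shown.

```python
from typing import Callable, Sequence


class AllRegionsDown(Exception):
    """Raised when no region could serve the request."""


def call_with_failover(regions: Sequence[tuple[str, Callable[[], str]]]) -> tuple[str, str]:
    """Try each (region_name, handler) in priority order; return the first success.

    Returns (region_name, response). Any exception raised by a handler is
    treated as that region being unavailable.
    """
    errors = []
    for name, handler in regions:
        try:
            return name, handler()
        except Exception as exc:  # outage, timeout, bad deploy, ...
            errors.append(f"{name}: {exc}")
    raise AllRegionsDown("; ".join(errors))


# Hypothetical handlers standing in for calls to two data centers.
def primary() -> str:
    raise ConnectionError("power outage in primary data center")


def standby() -> str:
    return "served from standby"


print(call_with_failover([("primary", primary), ("standby", standby)]))
# ('standby', 'served from standby')
```

Note that the standby has to exist, and be kept up to date, long before `primary` ever fails, which is where the cost of the idle data center comes from.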

Each of the major cloud providers operates dozens of regions, and each region contains multiple Availability Zones, each with redundant power supplies. The public cloud providers have invested billions of dollars in dedicated fiber lines connecting their regions. The modern public cloud data center is an absolute beast of engineering and complexity, and this video from Microsoft Azure CTO Mark Russinovich offers an unparalleled look into the details (a long video, but well worth the watch).

Problem #5 - Developer Productivity (and Happiness)

Ahh, developers. Like everyone else, we all want to work at a company whose mission we support, with people we enjoy working with. But I have found two things to be nearly universal: (1) developers want to make sure their work is used and appreciated, and (2) developers like shiny new things. And the cloud offers an endless supply of shiny new toys.

Need to integrate with a new API or set up an ETL job? It’s going to be a lot more exciting to set up a greenfield Kinesis pipeline, process messages with Lambda, and architect a new MongoDB schema.

Kidding aside, this is a very real thing. Many legacy applications from the early days of computing run on massive data center mainframes, and the generation of programmers who grew up with COBOL is retiring, leaving behind complex programs that few people understand. The cloud offers tools and products that facilitate rapid application development and deployment and abstract away many difficult problems, allowing developers to focus on application-specific details.
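For a taste of the Kinesis-and-Lambda pipeline joked about above, this is roughly what the Lambda half looks like in Python. AWS Lambda invokes a Python handler as `handler(event, context)`, and for a Kinesis trigger each entry in `event["Records"]` carries its payload base64-encoded under `record["kinesis"]["data"]`. The assumption that producers send JSON, and the shape of the return value, are illustrative choices; the MongoDB side is not shown.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode each Kinesis record in a Lambda invocation and collect the JSON payloads."""
    messages = []
    for record in event.get("Records", []):
        # Kinesis delivers each record's payload base64-encoded.
        raw = base64.b64decode(record["kinesis"]["data"])
        # Assumption: producers put JSON documents on the stream.
        messages.append(json.loads(raw))
    # Hypothetical processing step: a real pipeline might write these to a database.
    return {"processed": len(messages), "messages": messages}
```

Locally, the handler can be exercised with a hand-built event such as `{"Records": [{"kinesis": {"data": base64.b64encode(b'{"order_id": 1}').decode()}}]}`; no servers, queues, or deployment scripts are needed to get started, which is much of the appeal.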

Problem #6 - Out of the Box Integrations and Managed Services

The engineering being done at the major cloud providers is some of the most incredible, complex, and innovative work in the world. The internet as we know it came about only ~30 years ago, and progress since then has been exponential. Every day, thousands of the smartest, most talented engineers ship code that creates new layers and abstractions in the cloud for everyone else to build upon. It really is a massive Lego set, with each piece offering new functionality that can be used to build whole worlds of new buildings and castles.

AWS offers more than 200 cloud services, spanning compute, storage, networking, analytics, and artificial intelligence, and Azure and GCP offer comparable catalogs. All of these services are out-of-the-box functionality for an enterprise, available at the click of a button and complete with options for infrastructure as code, monitoring, and all of the other benefits we have discussed (redundancy and failover, auto-scaling, and on-demand provisioning).

Software engineering moves at a rapid pace, with new technologies emerging seemingly every year. This pace of innovation contributes directly to the massive technological changes we see in society every year: robotic manufacturing (and same-day delivery), pharmaceutical discovery, and on-demand rides and food delivery. So much of our technological innovation resides in, and is possible because of, the cloud.

All of these services that AWS, Azure, and GCP provide can be neatly summed up as the proverbial ‘undifferentiated heavy lifting’. This is all very complex work. But unless your company's core competency is creating database software, the incentive is to manage as little database software as possible and focus on the end user applications.

Problem #7 - Keeping Up with Rapid Changes in Technology

By some projections, 50% of the companies in the current S&P 500 index will be replaced in the next decade. And software is still eating the world. As TechCrunch alludes to, it is imperative for companies to advance their digital offerings and focus on their core competencies to remain competitive in the ever-changing IT industry. Any effort wasted on maintaining old infrastructure or reinventing the digital wheel can quickly spell the end for a company.

Keeping up with all of the advances in technology is a job itself for most companies. With cloud computing, companies can leverage the 'latest and greatest'. Need to experiment with Virtual Reality or Augmented Reality for your product? Need to leverage a new world class database service? All of the cloud providers offer a full suite of options for quickly taking advantage of this technology.

McKinsey offers a full list of cloud innovation opportunities that enterprises in every industry can take advantage of. We haven't touched on artificial intelligence in much detail yet, but it is what looms ahead for every business in the next decade. ARK Invest indicates that deep learning could contribute new market capitalization in excess of $17 trillion over the next two decades.

Companies that expect to maintain market share already are, and will increasingly be, required to leverage technology to the fullest extent possible, and cloud computing will become absolutely necessary for all but the most prolific technology companies that may gain a competitive advantage from their own clouds (e.g., Tesla, Facebook).

Part III - Executing on Digital Transformation

It is easy to sit back and hypothesize about the future, and about how the evolution of the cloud, or any sort of digital transformation, will take place. The sales, execution, and implementation of these digital strategies are where the rubber meets the road, and experts across many fields have an incredible wealth of knowledge to share. We have only scratched the surface here in a 101-style intro. Using the cloud is extremely complex, and at some point you will still get paged and end up on a conference call with a support team from AWS/Azure/GCP about something they (hopefully not you) messed up. The cloud is not perfect by any means. But with all of the problems it addresses and the solutions it provides, it is absolutely here to stay and will become an omnipresent part of all of our lives over the next decade.

Be sure to subscribe to the Exponential Layers newsletter and YouTube channel to get all updates on new videos and articles.
